This guide is still actively under construction. There may be inaccuracies or missing details. Feel free to comment, to point out anything you see wrong, or to ask for clarification on any details.

This guide was created in Q1 2025. The Dell PowerEdge R710 has been considered End-of-Life for a number of years, but it still offers impressive performance and flexibility for its age. The R710 remains extremely popular for Home Labs and Legacy Enterprise Systems. Finding Brand New / Sealed OEM parts for the R710 may prove difficult in 2025 and beyond, but finding used parts on popular marketplaces is very easy and usually very low cost.

This article addresses how Dell Support has made it extremely tedious and difficult to maintain and update the R710’s software and firmware, and it discusses “unsupported hardware configurations”, physical modifications, customizations, and networking and storage options.

If you are anything like the thousands of other home lab enthusiasts, the R710 is likely your first foray into Enterprise Grade Server Hardware. Sometime around 2017, the Dell PowerEdge R710 Server became extremely popular for home labs due to the substantial drop in cost and the enormous availability of decommissioned and off-lease R710s. As a result, R710 parts became plentiful and relatively cheap. Another benefit of owning an R710 is the immense amount of information available on how to troubleshoot, upgrade, configure and maintain it in performant, energy-efficient, cost-effective and flexible ways.

While you can find a plethora of details about the R710 directly from Dell, many caveats and details that have emerged in the years since its original release are missing. Examples include “R710 v2 and v3 motherboards” (sometimes referred to as “Gen II” with Roman numerals) that support more CPU models, as well as unsupported memory and hardware configurations.

Dell PowerEdge R710 System Overview

The R710 is an 11th Generation Enterprise Server in the Dell PowerEdge platform. It has a 2U rack-mountable form factor with a motherboard that provides 2 CPU sockets, 18 DIMM slots for DDR3 ECC RAM, and 2 riser cards with a maximum total of 5 PCIe 2.0 slots, one of which is a PCIe 2.0 x8 slot intended for the storage controller that connects to the backplane.

There are 3 different front bezel and storage configurations that the R710 comes in. Two models have 3.5″ drive bays and one has 2.5″ drive bays.

Both form factors also have a “Flex Bay Option” that was seemingly intended for a half-height TBU (Tape Backup Unit). However, on one of the 3.5″ drive bay form factors, the Flex Bay option reduces the available 3.5″ drive tray slots to only 4 (four) to make room for the flex bay.

The R710 model with 6 (six) 3.5″ drive tray slots and an integrated backplane (Dell P/N: YJ221) is very popular with “Gen I” (Generation 1, or Version 1) motherboards.

Another R710 model often found with “Gen I” motherboards also uses 3.5″ drives, but its backplane and front bezel have only 4 (four) 3.5″ HDD/SAS tray slots (Dell P/N: YJ221), leaving room for the “flex bay” option.

The R710 model with 8 (eight) 2.5″ drive tray slots and a backplane designed for 2.5″ SAS HDDs and 2.5″ and 1.8″ SSDs (MX827) has its optional flex bay access under the LCD screen on the leftmost side, nearest to the 2 (two) front USB 2.0 ports.

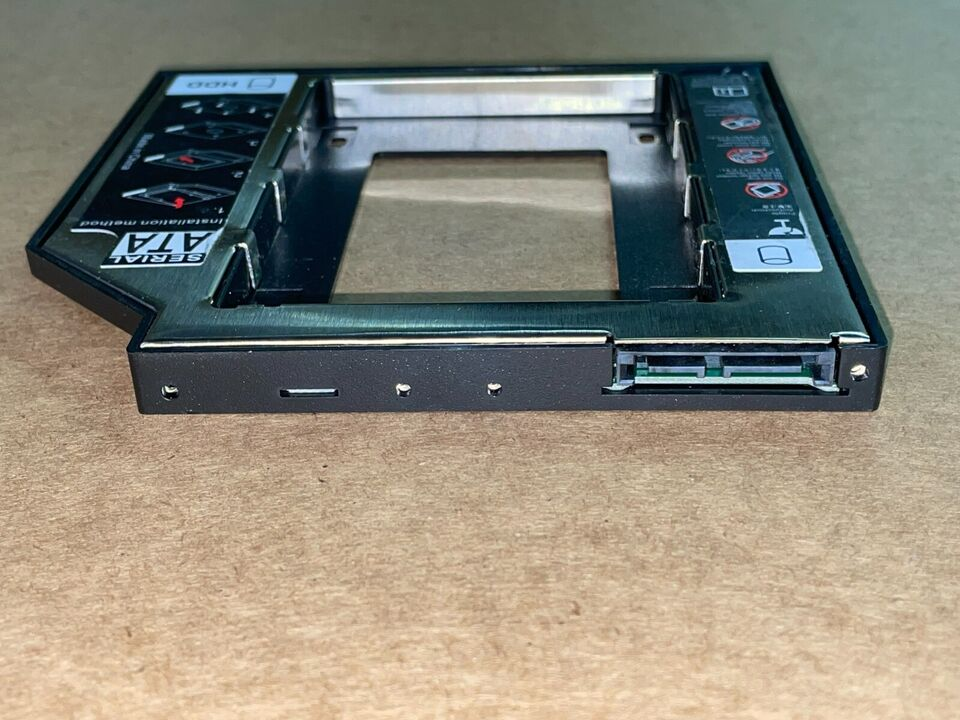

There are 2 onboard SATA ports that can be used in addition to, and separately from, the front-loaded storage bays. One SATA port is often used for the optional-but-common slim CD/DVD drive.

All models also have 2 rear USB 2.0 ports, a daughter card option that provides up to 4 (four) 1Gb RJ45 Ethernet NIC ports, and an option for an integrated IDRAC6 card. The IDRAC6 card adds a dedicated RJ45 “console” port that can be used to securely access the IDRAC (Dell-specific IPMI) console through a web browser or terminal. There is also a traditional VGA (15-pin video) port and a COM (9-pin serial) port on the rear of the R710, as well as a “rack locator beacon” button that “flashes” the front LCD and clears displayed LCD errors when held.

Most R710 models have an LCD panel that can be used to configure IDRAC6 (Integrated Dell Remote Access Controller, version 6) settings. You can configure what the LCD displays by default, such as temperature or power consumption. You can also set the IP address, subnet mask, gateway and DNS server used for IDRAC browser, console and RACADM command-line access (See here for the RACADM Reference Guide). Some IDRAC6 options also include “vFlash”, a Dell-specific SD card format, which (reportedly) can function as a PXE server for boot and recovery images.
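If you would rather script the IDRAC6 network settings than click through the LCD, the sketch below builds the RACADM commands as a dry run: it validates the addresses and prints the commands for you to review, but runs nothing. It assumes the local IDRAC6 RACADM syntax (`setniccfg -s`, `getniccfg`, and the `cfgLanNetworking` group with `cfgDNSServersFromDHCP`/`cfgDNSServer1`), so double-check each one against the RACADM Reference Guide for your firmware. The function names and the addresses in the usage line are hypothetical placeholders.

```bash
#!/usr/bin/env bash
# Dry run only: prints RACADM commands for a static IDRAC6 address, runs nothing.
# Assumption: local IDRAC6 racadm syntax (setniccfg, cfgLanNetworking group);
# confirm each command in the RACADM Reference Guide before running it.

# Return 0 if $1 is a dotted-quad IPv4 address with every octet in 0-255.
is_ipv4() {
  local ip=$1 octet
  local -a octets
  [[ $ip =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]] || return 1
  IFS=. read -r -a octets <<< "$ip"
  for octet in "${octets[@]}"; do
    (( 10#$octet <= 255 )) || return 1   # 10# avoids octal parsing of "08"
  done
  return 0
}

# Print commands that set a static IP/netmask/gateway, switch DNS to static,
# set the primary DNS server, and then show the resulting NIC configuration.
idrac_static_ip_cmds() {
  local ip=$1 mask=$2 gw=$3 dns=$4 addr
  for addr in "$ip" "$mask" "$gw" "$dns"; do
    is_ipv4 "$addr" || { echo "invalid IPv4 address: '$addr'" >&2; return 1; }
  done
  echo "racadm setniccfg -s $ip $mask $gw"
  echo "racadm config -g cfgLanNetworking -o cfgDNSServersFromDHCP 0"
  echo "racadm config -g cfgLanNetworking -o cfgDNSServer1 $dns"
  echo "racadm getniccfg"
}
```

Usage (placeholder addresses): `idrac_static_ip_cmds 192.168.1.120 255.255.255.0 192.168.1.1 192.168.1.1`. Printing instead of executing is deliberate: a typo in the IDRAC address can lock you out of remote management until you fix it from the LCD.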

There is also a single internal USB 2.0 port just above the front bezel, underneath where the top “latch” sits when the “lid” of the R710 chassis is “locked”. This works best as a bootable USB port for installing operating systems, and it can be configured as the default boot device.

There is also an option for an SD card slot that sits above the backplane on the far left side of the R710 chassis. It is also possible to boot from this optional SD card, but it operates on a USB 2.0 bus, so it is subject to the same speed limitations. Larger SD cards (over 1TB) were tested and worked fine with the SD card option installed.

Take note that there are differences between “Gen I” and “Gen II” R710 motherboards, mainly in CPU support. “Gen I” motherboards do NOT support CPUs with a TDP of 130W, nor the “Low Power 6-core options” that were released after the original production run of the first revision of the R710 motherboard. That said, everything else about the board configuration seems to have stayed the same.

There is a modular cooling fan “assembly” of 5 fans that draws cool air in through the front bezel and pushes it out the back of the R710 with static pressure. Most of the storage controller options for the R710, particularly the LSI/MegaRAID options, have a little-known requirement for a small minimum airflow (measured in CFM, cubic feet per minute), which these fans supply when the lid is closed. Without the fans running, the storage controller and onboard chipsets may overheat and cause problems with system health and stability.

Now that we have covered (most of) the “base options” and features of a bare-bones R710, let’s drill down into installing various components and options.

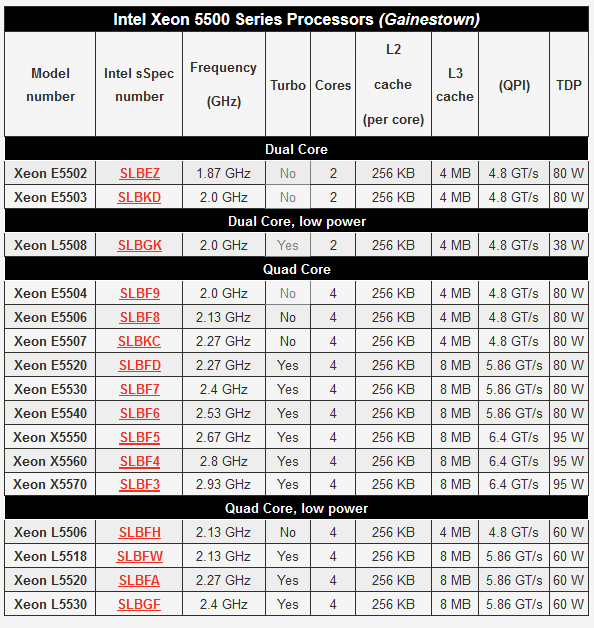

PowerEdge R710 CPUs

Note that there is an enormous number of CPUs that are “recommended” or “supported” by Dell, but some other, odder Intel CPUs have also been confirmed to work in the R710. For this reason, you will likely find a plethora of info on R710 CPUs that is not included in the “Official Dell Support” documents. There also seem to be a small number of unlisted Intel Xeon CPUs available from smaller countries (which can seem pretty sus…).

See FlagshipTech’s lists of compatible Intel Xeon CPUs for the R710:

- Intel Xeon 5500 “Gainestown” – https://store.flagshiptech.com/intel-xeon-5500-gainestown-cpus/

- Intel Xeon 5600 “Westmere-EP” – https://store.flagshiptech.com/intel-xeon-5600-westmere-ep-cpus/

If you followed along in the overview section, you will recall there are two different R710 motherboard “generations”: “Gen I” and “Gen II” – both of which have different Dell Part Numbers.

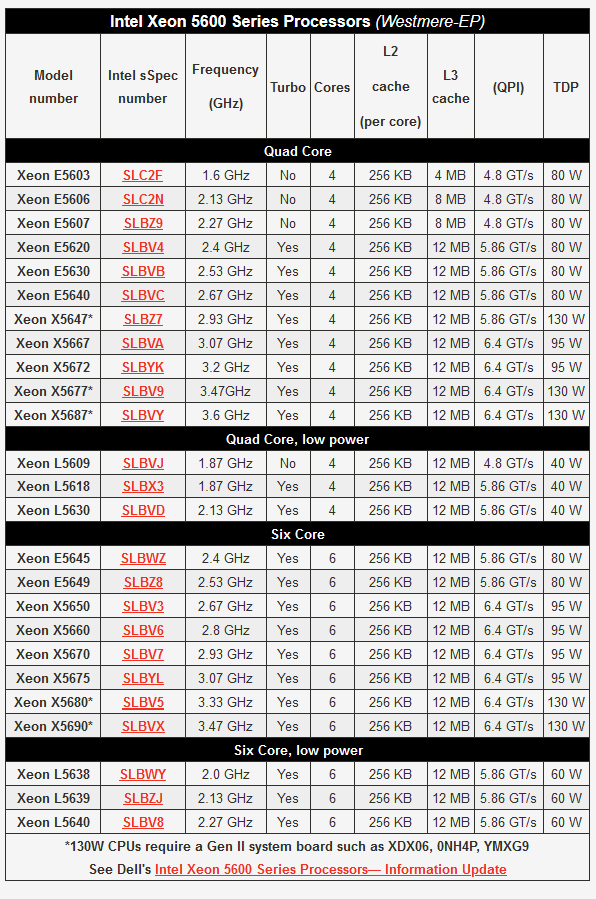

The exact publication date of the document referenced here is unclear (FlagshipTech links to it incorrectly, resulting in a 404…), but Dell seemingly posted it around 2010, when the Intel Xeon 5600 series CPUs were the latest available, though it may have been as late as 2013.

The linked document outlines the new hardware characteristics for Low Voltage DDR3 RAM (1.35V instead of 1.5V), new BIOS options, and “Dell BIOS Intelligent Turbo Mode”.

Now we can start working out our CPU options for a particular R710 motherboard version, since we have our list of different models (most are included above, but remember: not ALL…). First, we need the Dell Part Number of our specific R710 motherboard.

- Dell R710 “Gen I” Motherboard Part Numbers: (Least desirable, due to age and CPU limitations)

- YDJK3

- N047H

- 7THW3

- VWN1R

- 0W9X3

- Dell R710 “Gen II” Motherboard Part Numbers: (More desirable, due to having more CPU options)

- XDX06

- 0NH4P

- YMXG9

- 2 x CPU (Intel) sockets

- CPU Compatibility

- Intel(R) Xeon(R) CPU X5680 @ 3.33GHz (Recommended by CE for best performance value)

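Since the board generation decides which CPUs you can run, here is a tiny lookup sketch that only knows the part numbers listed above. Dell may well have used other part numbers, so “unknown” just means “not in this guide’s list”, not “unsupported”. Read the part number off the motherboard label; the handling of a leading zero on 6-character input (e.g. 0XDX06) is an assumption about how the label may be printed, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Classify an R710 motherboard as Gen I / Gen II using ONLY the part numbers
# listed in this guide. "unknown" means "not in the list", nothing more.
r710_board_gen() {
  local pn
  pn=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')
  # Assumption: labels may add a leading zero (0XDX06); strip it for 6-char input.
  if [ "${#pn}" -eq 6 ]; then pn=${pn#0}; fi
  case "$pn" in
    YDJK3|N047H|7THW3|VWN1R|0W9X3) echo "Gen I" ;;
    XDX06|0NH4P|YMXG9) echo "Gen II" ;;
    *) echo "unknown" ;;
  esac
}
```

For example, `r710_board_gen 0xdx06` prints `Gen II`. If you get `Gen I`, skip the 130W TDP parts such as the X5680.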

If you are wondering whether you can use only 1 CPU instead of 2 in the R710, the answer is “Yes”. According to Dell:

“Single Processor Configuration

The PowerEdge R710 is designed so that a single processor placed in the CPU1 socket functions normally. The system will halt during power-on self-test (POST) if a single processor is placed in the CPU2 socket. If using a single processor, the R710 requires a heatsink blank in the CPU2 socket for thermal reasons.”

– https://i.dell.com/sites/doccontent/business/solutions/engineering-docs/en/Documents/server-poweredge-r710-tech-guidebook.pdf

Note that you will still need either a second heatsink or some kind of “heatsink blank” in the CPU2 socket (eBay *MIGHT* have the blank, but the actual heatsink is not much different in price… or some kind of mod might work? Dell P/N: 0WK640 – https://www.ebay.com/sch/i.html?_nkw=0WK640)

But using only 1 CPU seems like a bit of a waste, doesn’t it? Especially when you can find R710-compatible CPUs for relatively cheap in 2025.

Also, you might be wondering “Do both CPUs in an R710 have to match each other?”

The short answer? Yes, they do.

But there are some nuances to this, according to the very knowledgeable folks over at the ServeTheHome Forums, who were asked about mismatched CPUs in Dell R710s.

CoolElectricity Recommendations for PowerEdge R710 CPUs

- Best Performance Value in 2025Q1

- NOTE: THIS RECOMMENDATION IS FOR THOSE WITH “GEN II” MOTHERBOARDS ONLY!

- Intel Xeon X5680 CPU x 2

- The 2nd-most powerful CPUs possible in the R710

- 3.33GHz with 6 cores and 12 threads (totaling 24 possible vCPUs with 2x CPUs)

- “Best Bang for the Buck for Performance” in upfront cost, but they have a TDP of 130W EACH, so your electricity bill will be higher every month, too.

- “Gen II” motherboard Dell Part Numbers: XDX06, 0NH4P, YMXG9 (there may be others)

- Best Energy Efficiency Value in 2025Q1

- This Recommendation is for “Gen I” OR “Gen II” R710 motherboards

- The fastest clock speed and the most cores available at lower power consumption

- Intel Xeon L5640 CPU x 2

- 2.26GHz with 6 cores and 12 threads (totaling 24 possible vCPUs with 2x CPUs)

- “Best Bang for the Buck for Efficiency” in upfront cost and performance, with a TDP of only 60W each, so your electricity bill will be lower every month, too.
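To put the two recommendations side by side, here is a back-of-the-envelope sketch. The vCPU math (sockets x cores x threads) and the 130W/60W TDP figures come from the lists above. The big assumption is that both CPUs draw their full TDP 24/7, which badly overstates real usage (TDP is a thermal rating, and a mostly idle R710 draws far less), so treat the results as a worst-case ceiling. The $0.15/kWh rate in the usage note is a hypothetical example, and the function names are made up for illustration.

```bash
#!/usr/bin/env bash
# Worst-case CPU energy comparison for the two R710 CPU recommendations.
# Assumption: CPUs run at full TDP 24 hours a day, 365 days a year.

# Logical CPUs (vCPUs) = sockets x cores per socket x threads per core.
r710_vcpus() { echo $(( $1 * $2 * $3 )); }

# Yearly energy in kWh for N CPUs at a given TDP in watts.
tdp_annual_kwh() {
  awk -v w="$1" -v n="$2" 'BEGIN { printf "%.1f\n", w * n * 24 * 365 / 1000 }'
}

# Cost of a number of kWh at a given price per kWh.
kwh_cost() {
  awk -v kwh="$1" -v rate="$2" 'BEGIN { printf "%.2f\n", kwh * rate }'
}
```

Usage: `r710_vcpus 2 6 2` gives 24 for either pair. `tdp_annual_kwh 130 2` (2 x X5680) gives 2277.6 and `tdp_annual_kwh 60 2` (2 x L5640) gives 1051.2, a worst-case gap of 1226.4 kWh per year; at a hypothetical $0.15/kWh, `kwh_cost 1226.4 0.15` gives 183.96.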

PowerEdge R710 RAM – 18x DDR3 ECC DIMM Slots (3 Channels)

The R710 has 18 DDR3 DIMM slots: each CPU has 3 memory channels, and each channel has 3 DIMM slots, for 9 slots per CPU. In this guide, “Single Channel”, “Two Channels” and “Three Channels” refer to populating 1, 2 or all 3 of the DIMM slots in each channel. Are you confused yet? Just wait. It gets worse.

While it is technically possible to “mix and match” DDR3 ECC RAM sizes, this must be done in a very particular way. Larger modules must be placed in specific DIMM slots, based on the total memory configuration of the system. Each “side” of each memory channel must have a matching configuration. There are 9 DDR3 DIMM slots per CPU, 6 different possible DDR3 ECC RAM module capacities, and 3 possible operating speeds.

- 18 x DDR3 *BUFFERED* ECC RAM Module Details with R710 Compatibility

- Important Note: Avoid UNBUFFERED ECC RAM – See below for why

- 1066MT/s Speed (clocks down to 800MT/s when using all 3 memory channels)

- 1GB, 2GB, 4GB, 8GB, 16GB, 32GB

- 1333MT/s Speed (1600MT/s modules will clock down to 1333MT/s, or lower depending on how many slots are populated)

- 1GB, 2GB, 4GB, 8GB, 16GB, 32GB

- DDR3 ECC RAM Module Voltage Types

- Standard DDR3 ECC DIMM RAM Module voltage is 1.5V

- Low Voltage “DDR3L” ECC DIMM RAM Module voltage is 1.35V

- “Rank” Options for DDR3 ECC RAM Modules

- Single Rank (Best Compatibility, Fastest, Most Expensive)

- Dual Rank (Good Compatibility, Fast, Best Cost-to-Performance/Compatibility Value)

- Quad Rank (Typically Challenging Compatibility, Various speeds/capacity, Lowest Cost)

- Possible Operating Speeds for DDR3 ECC RAM in The Dell PowerEdge R710

- 1333MT/s (Single Channel, maximum 6 modules when using 2 x CPUs)

- Fastest possible operating speed of RAM for R710, but least capacity

- 1066MT/s (Two Channels, maximum 12 modules when using 2 x CPUs)

- Moderate operating speed of RAM for R710, with moderate capacity (Middle Ground)

- 800MT/s (Three Channels, all 18 DIMM slots populated when using 2 x CPUs)

- Slowest operating speed of RAM for R710, with maximum capacity


- Incompatibility and Limited DDR3 ECC RAM Module Details for R710 (NOT RECOMMENDED)

- About Unbuffered ECC Modules (UDIMM):

- 12 x 8GB (96GB – 2 channels) is the maximum capacity of Unbuffered ECC DDR3 RAM

- For this reason, CE does NOT recommend using ANY “Unbuffered” ECC RAM Modules

- Unless you have no other modules and got them free and have zero budget, AVOID!

- About Mixing Different Speeds:

- In some configurations, you can “get it to work” if you mix modules of different speeds, but the system will run all of the modules at the clock rate of the slowest one (if it works at all).

- Even though “it might work”, always try to “mirror each side of the memory channel with matching pairs” (Assuming you are using both CPU sockets)


- About QUAD RANK DDR3 ECC modules in The R710:

- You can NOT use more than 2 channels (12 DIMM slots, 6 per CPU) if you have all Quad Rank Modules.

- For this reason, CE does NOT recommend using ANY Quad Rank Modules that are NOT 32GB, each.

- See the notes below about going beyond the Dell recommendations and spec sheet by using 12 x 32GB DDR3 Buffered ECC DIMM Modules for 384GB @ 1066MT/s

- BUDGET: This means do NOT buy 18 x 32GB modules; buy only 12 x 32GB

- DDR3 LRDIMM Modules are NOT supported on the R710.
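The speed and capacity rules above boil down to simple arithmetic, so here is a sketch that encodes this guide’s rules of thumb for single- and dual-rank registered DDR3. One DIMM per channel caps at 1333MT/s, two at 1066MT/s, and three at 800MT/s, with 3 channels per CPU. It deliberately does not model quad-rank modules or UDIMMs, whose limits are covered in the notes above, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Rules of thumb from this guide for single- and dual-rank registered DDR3 in
# the R710. Quad-rank modules and UDIMMs are deliberately NOT modeled.
# "Single/Two/Three Channels" in the guide = 1, 2 or 3 DIMMs per channel (dpc).

# Effective memory speed in MT/s for a given dpc and module rating.
r710_mem_speed() {
  local dpc=$1 rated=$2 cap
  case "$dpc" in
    1) cap=1333 ;;
    2) cap=1066 ;;
    3) cap=800 ;;
    *) echo "DIMMs per channel must be 1, 2 or 3" >&2; return 1 ;;
  esac
  # Modules run at the lower of their rating and the population cap.
  if [ "$rated" -lt "$cap" ]; then echo "$rated"; else echo "$cap"; fi
}

# Prints "<dimm count> <total GB>": 3 channels per CPU, 2 CPUs by default.
r710_mem_capacity() {
  local dpc=$1 size_gb=$2 cpus=${3:-2}
  local dimms=$(( dpc * 3 * cpus ))
  echo "$dimms $(( dimms * size_gb ))"
}
```

For example, `r710_mem_speed 2 1333` prints 1066 and `r710_mem_capacity 2 16` prints `12 192`, while `r710_mem_capacity 3 16` prints `18 288` (Dell’s official maximum) at only 800MT/s. That is the same speed-versus-capacity trade-off the recommendations below are built around.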

CoolElectricity Recommendations for PowerEdge R710 DDR3 ECC RAM

Since each “side” of every memory channel must have a matching configuration in capacity and “Rank”, and there are 9 DDR3 DIMM slots per CPU and 6 different possible DDR3 ECC RAM module capacities, the number of possible caveats and combinations of Single Rank, Dual Rank, Quad Rank, DDR3, DDR3L and 3+ different DDR3 RAM speeds grows very quickly. For these reasons, CoolElectricity recommends using ALL matching DDR3 ECC DIMM capacities, to reduce the headache involved in testing the different combinations…

The boot and memory testing processes for the R710 are VERY slow by the boot time standards of Q1 2025. The Dell PowerEdge R710 Server was never designed to be rebooted frequently, and it shows: it was designed to be an “Always-On Production Grade Server”. Testing 4 or more memory configurations could take up hours of your day.

So, assuming from here on that all memory modules match in capacity, voltage type and “Rank”, it is easier to break things down into different recommendations:

- Best Value for Speed Recommendations for PowerEdge R710 DDR3 ECC RAM Modules and Channels

- 1333MT/s modules, whether populating a Single Channel (1333MT/s) or Two Channels (1066MT/s)

- Dual Rank modules (Quad Rank works for 1/2 channels, but limits capacity expansion)

- 16GB or 32GB capacity modules (depending on your RAM capacity upgrade strategy)

- Populate only a Single Channel for 1333MT/s and Two Channels for 1066MT/s

- Maximum Slot Population is only 6 (!One DIMM Slot per Channel!) for 1333MT/s speeds

- Maximum Slot Population is only 12 (!Two DIMM Slots per Channel!) for 1066MT/s speeds

- Best Value for Capacity Recommendations for PowerEdge R710 DDR3 ECC RAM

- 1066MT/s modules

- Dual Rank modules (Quad Rank works with 2 channels maximum, not all three)

- 16GB or 32GB capacity modules (depending on your RAM capacity/speed needs and budget)

*Going Beyond Dell R710 DDR3 Buffered ECC DIMM Spec Sheet Recommended Maximum

The maximum RAM capacity for the PowerEdge R710 is 288GB (18 x 16GB modules), according to Dell. However, according to multiple very knowledgeable sources, testing has found that the R710 can sometimes be maxed out at 384GB by using 12 x 32GB DDR3 Buffered ECC Registered Memory DIMM Modules, presumably at 1066MT/s. As the cost of 32GB DDR3 ECC modules continues to drop, it may become more cost-effective (dollar-per-GB) and more performant (1066MT/s versus 800MT/s) to use 12 modules at 32GB each (All 32GB
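To check the dollar-per-GB side of that argument with real listings, a small sketch like the one below compares layouts by total size, total cost and cost per GB. The module prices are entirely your inputs; the ones in the usage note are hypothetical numbers chosen only to show the output format, not market data. The function name is also made up for illustration.

```bash
#!/usr/bin/env bash
# Compare memory layouts by total size, total cost and cost per GB.
# Module prices are YOUR inputs; this sketch assumes no particular prices.
# Args: module count, module size in GB, price per module.
r710_layout_cost() {
  awk -v n="$1" -v s="$2" -v p="$3" 'BEGIN {
    total_gb = n * s
    total_cost = n * p
    printf "%dGB total=%.2f per_gb=%.2f\n", total_gb, total_cost, total_cost / total_gb
  }'
}
```

With hypothetical prices of $12 per 16GB module and $30 per 32GB module, `r710_layout_cost 18 16 12` prints `288GB total=216.00 per_gb=0.75` and `r710_layout_cost 12 32 30` prints `384GB total=360.00 per_gb=0.94`. Plug in current listings to see which side of that line the market is on.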

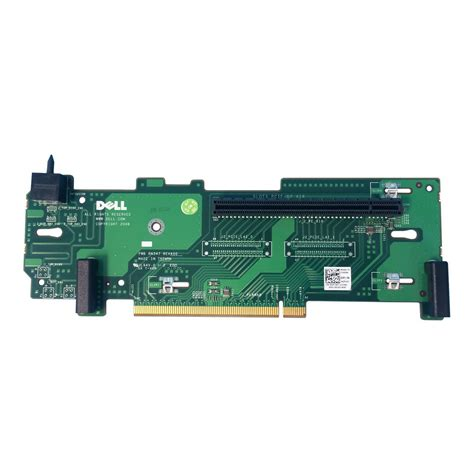

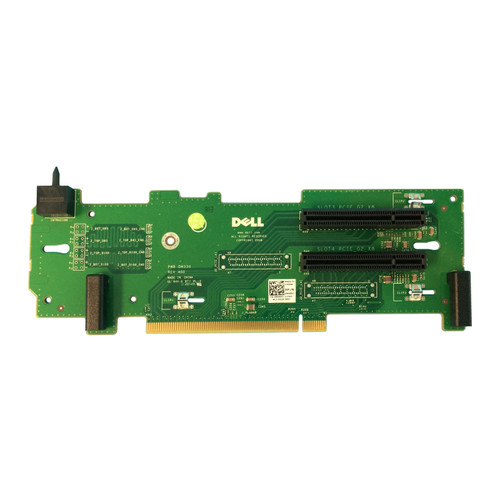

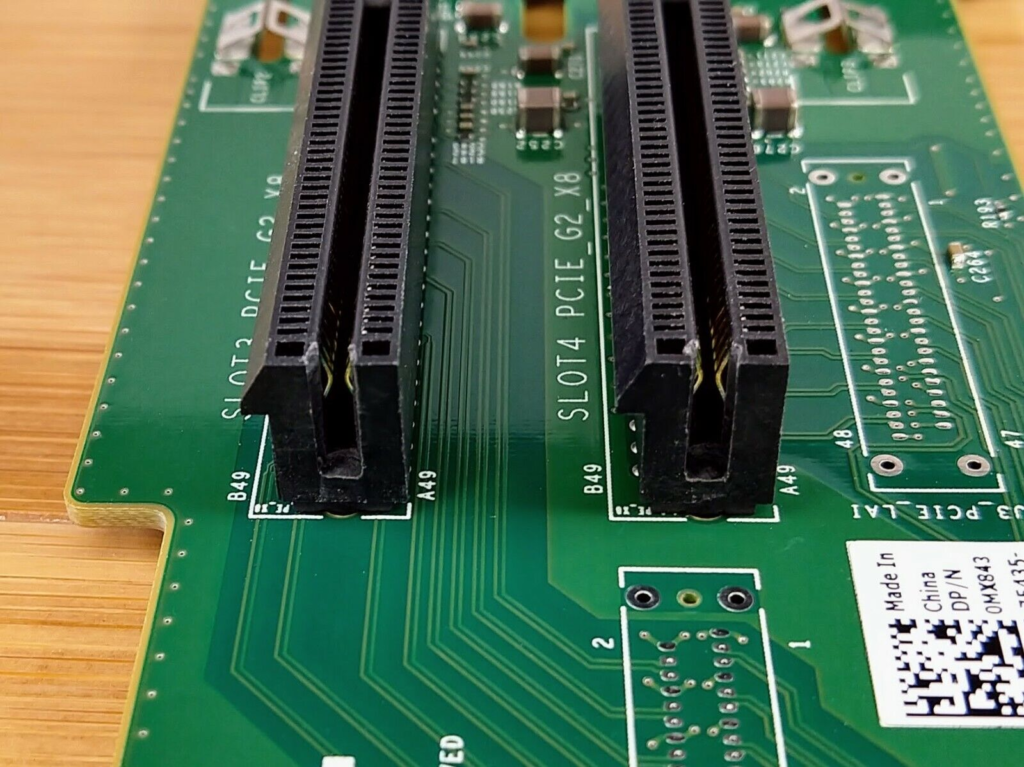

PowerEdge R710 PCIe 2.0 Riser Cards

- R710 Riser 1 Configurations

- 3 x PCIe 2.0 Slot Riser Card

- One x8 PCIe 2.0 slot (for the Storage Controller to backplane)

- Two x4 PCIe 2.0 slots


- R710 Riser 2 Configurations

- 2 x PCIe 2.0 Slot Riser

- 1 x PCIe 2.0 Slot Riser Card

Since the other PCIe Riser 2 options for the R710 are so rare and hard to find (and therefore expensive), it is recommended to work with the Riser 2 that has two PCIe 2.0 x8 slots. However, see the details about “unsupported” possibilities for a few “dangerous” modified-riser options in the R710 if this is a big dealbreaker for your use case.

Compatible Parts Platforms for The Dell PowerEdge R710

There is no way that we can know EVERY compatibility caveat and component for the R710, but there tends to be a consistent trend for using certain brands and aftermarket components.

- Most Dell Storage Controllers that are PCIe 2.x are actually LSI / MegaRAID (now Broadcom) with Dell-specific firmware.

- LSI-branded Storage Controllers tend to have a plethora of options available that will work well with the Dell PowerEdge Platform

- Generic Branded Storage Controllers might work with some limitations.

- Since the R710 has 2 onboard SATA ports and bootable options for SD cards and USB drives, you might be able to get crafty and use Clover to boot a system installed on disks attached to lesser-known and obscure storage controllers

- Single Disk NVMe PCIe Adapter card, or built-in NVMe PCIe disk (which is technically not a “controller”)

- But keep in mind that you are still limited to 500MB/s per PCIe 2.0 lane, so an NVMe drive (which uses at most 4 lanes) theoretically maxes out at 2000MB/s (2GB/s, or 16Gbps). And because the R710 motherboard has no PCIe bifurcation options in the system BIOS or UEFI, you cannot split a wider slot across multiple NVMe drives with a simple passive adapter. So if you want an NVMe drive for your R710, use one of the PCIe x4 slots on Riser 1, and buy a lower-cost PCIe 3.0 NVMe drive with top speeds under 3000MB/s so you don’t waste money or capacity. Note again that by using the internal USB port or SD card as the default boot device, you could install and configure CloverBootloader (a custom boot manager) to allow booting from a PCIe-to-NVMe disk, though boot times will still be slow due to the USB 2.0 speeds of both of these boot device options.
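Where does the 500MB/s-per-lane figure come from? PCIe 2.0 signals at 5 GT/s per lane and uses 8b/10b encoding, so only 8 of every 10 bits carry data. The sketch below just does that arithmetic for a given lane count (per direction, ignoring protocol overhead, so real-world numbers land lower). The function name is hypothetical.

```bash
#!/usr/bin/env bash
# PCIe 2.0: 5 GT/s per lane with 8b/10b encoding, so 5000 Mb/s * 8/10 =
# 4000 Mb/s = 500 MB/s of usable bandwidth per lane, per direction.
# Protocol overhead is ignored, so real transfers come in below this.
pcie2_link() {
  local lanes=$1
  local mbit=$(( lanes * 5000 * 8 / 10 ))   # usable megabits per second
  echo "$(( mbit / 8 ))MB/s $(( mbit / 1000 ))Gb/s"
}
```

`pcie2_link 4` prints `2000MB/s 16Gb/s` (an NVMe drive in one of Riser 1’s x4 slots), and `pcie2_link 8` prints `4000MB/s 32Gb/s` (the x8 storage controller slot).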

Dell PowerEdge R710 Storage Controller Options

The typical Dell R710 Server will have one of 3 possible Storage Controllers installed in the dedicated PCIe 2.0 x8 slot of Riser 1 (at the bottom), housed in a sort of “clip holder” that keeps the Storage Controller card in place (sort of…) and prevents “sagging” from gravity pulling down on the card as it rests in the slot. It’s not a terrible design, but it can be weird to work with at first. On more than one occasion, when moving the chassis around, the card has “wiggled loose” juuuuuuuust barely enough to cause problems. So any time the R710 chassis takes any kind of “shock”, make sure the card is “clipped and slid” completely into the grooves of the “housing” and that the PCIe interface pins are firmly seated in the PCIe socket, as intended.

- PERC 5/i for R710 (Dell P/N: 0MN985)

- NOPE. The best performance you will see from this card is roughly 500MB/s of data transfer across an 8-disk RAID 0 array. That is the equivalent of a single cheap SATA II SSD from the late 2000s, which suggests this card only EVER supported about 250MB/s per channel (*HOT GARBAGE).

- It is a fun card for playing around with and learning about cross-flashing Dell and LSI/MegaRAID BIOS configurations for adding/removing “IT Mode”, but otherwise it’s nearly useless. Don’t expect to get much out of all the time you will spend researching and hacking on this card, aside from knowledge and experience of how much the world of IT used to suck even harder than it does now.

- You can get PERC 5/i cards cheap AF, and SAS/SATA breakout cables almost as cheap, too. The two SFF-8484 SAS connectors at the top support a total of eight SATA or SAS hard drives. But there is a reason they are so cheap: NO ONE WANTS THE PERC 5/i.

- This card has a “tiny cable port” that is intended to link to the “battery bay” in the center, above the storage backplane, under the “latch” of the R710 chassis, but the card will actually work without the battery, too. It might nag at you, but if you use single-disk RAID0 arrays/VDs for all of your drives, you won’t have any use for the battery’s “cache protector” purpose, either. That said, without it, IDRAC6 will nag you forever…

- The battery and its connector cable are intended to retain any data that was “mid flight” during an abrupt power failure, theoretically reducing the odds of important data corruption. If you DO use a multi-disk RAID array on the PERC 5/i, you will want the battery and cable working properly. The only good news is that if you have this card with this battery, you could use the battery with a Dell H700 Storage Controller, too. That is literally the only upside to owning a PERC 5/i in 2025.

- There is a DDR2 cache module option on the PERC 5/i as well, but if you are using it in IT mode, it won’t matter, anyway. It barely matters even in a RAID configuration.

- Performance testing done by CoolElectricity on the PERC 5/i topped out around 550MB/s with an 8 x HDD RAID0 array, before the card overheated and started getting flaky. This is essentially the same as what OverClockers.com found in its 2010 benchmarks of the Dell PERC 5/i.

- By design, the PERC 5/i is supposed to get a few CFM of airflow across its heatsink, so you might need to add a fan if you try to use or pre-configure this card in a desktop machine (which is possible; no hacking of the heatsink is needed, though maybe some fresh thermal paste).

- When the PERC 5/i is actually installed inside the R710, the fan assembly provides enough airflow for the heatsink not to overheat (assuming the R710 fans are working properly).

- Dell H330 Storage Controller for R710

- You don’t want this one, either. It’s very slow, and very old. There is no onboard cache on this model. It is reportedly intended for RAID 1 mirroring of OS volumes, which makes it a redundancy mechanism: should one of a set of 2, 4, 6 or 8 disks with an OS installed on them fail, a “mirrored copy” seamlessly takes over (in theory… practice is another story…). TL;DR: This card was never designed with performance in mind, and it will always be slow and awful, unless it’s your only option and/or your hard drives are SATA I or early SATA II (in which case it just won’t matter which storage controller you use).

- This may be the worst option among those with “Dell Official Support” for the R710. Just don’t.

- This is one of the officially supported-by-Dell Storage Controller cards for the R710 that CoolElectricity never acquired for testing, but given its specs and the immense negative feedback about its performance from others, it’s no big loss.

- Do NOT get this “H330” confused with the far-superior “HBA330”, mentioned below.

- Dell HBA330 Storage Controller for R710 – Highly Sought After for ZFS

- This is a commonly sought-after model that is the de-facto goldilocks standard of Official Dell Support for the R710, and it is recommended by the “ZFS Community” (such as the very smart, talented and opinionated folks in the TrueNAS community). ZFS is a much newer and far more advanced technology that aims to surpass the benefits of old-school RAID arrays, and this Storage Controller is so often recommended for it precisely because it has no RAID functionality at all. There is also no cache module or slot like on other storage controllers (which we will dive into more below). This means you get as-pure-as-possible signaling between the hard drives and the PCIe 2.0 bus, and multiple drives appear to your chosen operating system (and hypervisors) without having to “fake” single-disk arrays or use a JBOD configuration. If you want to appease the tech gods of the ZFS Community, this is the card to use in your R710.

- Dell PERC 6/i for R710

- NOT Recommended. Basically useless except in some very rare edge cases. The PERC 6/i seems to complain even more than the PERC 5/i did about its “pwecious battewy” not being present or properly charged. Because of this annoyance and its generally slow performance (albeit better than the PERC 5/i), it’s a pretty terrible Storage Controller option in 2025.

- This is a very common Storage Controller that you may find “stock installed” in many R710 models. It’s probably fine for the 4 x 3.5″ disk bay model, since you will likely never see performance faster than ~825MB/s with 4 x SATA II or III HDDs in a RAID0. But that’s probably the only R710 configuration where it would make any sense.

- CoolElectricity performance tested the PERC 6/i with 8 x 2.5″ Enterprise SAS HDDs in the R710 and the peak transfer speed was limited to under ~825MB/s – Not adequate in 2025, and nearly half of what the Dell H700 controller provided for us (up next).

- The Dell PERC 6/i also suffers from the same physical disk size limitation as the PERC 5/i: a reported 2TB maximum size per disk. Thankfully for our testing, we used smaller disks.

- The PERC 6/i has 3 known cache module options, using a swappable/removable “chip” that looks somewhat like an oversized RAM module, because it is one. It’s a DDR2 module that comes in the following options:

- NONE (hey, technically it counts.)

- 512MB of cache memory on-module

- 1GB of cache memory on-module

- The usefulness of this cache module is debatable, but if it’s present, you may as well use it! DYOR as to whether a very early “version 1” read-cache-but-no-write-cache option for “CacheCade V1.0” exists on your PERC 6/i when you mix HDDs and SSDs (*not a recommendation, just a mention).

- Dell H700 for R710

- The Dell H700 reportedly CANNOT be flashed for use in “IT Mode”, even if you succeed in flashing LSI firmware onto it, so this controller is best left stock (unless this has changed in the last few years?). Since there is no known method of successfully flashing the Dell H700 into IT Mode to make it useful as a pass-through device for a ZFS configuration, we are sticking with RAID configurations on the Dell H700.

- The Dell H700 Storage Controller only recognizes up to 6TB per hard drive. So if you are using hard drives larger than 6TB in an R710, you may not be able to “see” the whole drive (e.g. only 6TB of an 8TB drive will be “visible” for the H700 to use), or in some cases, drives larger than 6TB are simply not recognized by the Dell H700 at all. This translates to a maximum capacity of 48TB (8 x 6TB) for the R710 with an 8 drive bay backplane.

- The Dell H700 has 3 known DDR2 RAM cache module options, using a swappable/removable “chip”:

- NONE

- 512MB of cache memory on-module

- 1GB of cache memory on-module

- The 1GB cache version of the Dell H700 has a feature known as “CacheCade Pro v2.0”, which lets users give up precious SSD storage space to serve as a “read and write cache” for Virtual Disks (VDs) made up of HDDs. This weird feature may or may not fit your use case, if it is even available for your specific model. See below for more details.

- The usefulness of “CacheCade” is well past its prime. The intent was to let the slower HDDs connected to the Dell H700 leverage the greater speeds of connected SSDs, potentially improving read/write speeds dramatically for data stored on the HDDs. While there is very little information about this old-timey “feature”, it was a thing. But now that SSDs are so much cheaper, with greater speeds and capacities, plus the addition of NVMe and U.2/U.3 for “Server Platforms”, this feature is arguably pointless and obsolete. This is particularly true since ZFS with an L2ARC cache on NVMe disks is far superior to RAID + CacheCade. The idea is similar, even if CacheCade never really added much benefit in real-world benchmarks once HDDs reached data transfer speeds of over 200MB/s. The best case for CacheCade might be RAID 1 sets of HDDs, where you use (or… sacrifice) an SSD to speed up that RAID 1 set a little. Is that worth the trade-off? You decide.

- CoolElectricity performance tested the H700 using the same 8 x Enterprise SAS HDDs used in our testing of the PERC 6/i, in the same RAID0 configuration, and we reached a peak data transfer rate of just over ~1500MB/s. Stunning? No. But adequate for the disk type used and the PCIe 2.0 bus speed limitations. To be sure this is the upper-limit data transfer rate of the Dell H700 Storage Controller, we also tested 8 x SSDs in a RAID 0 configuration and confirmed the same “speed limit” of ~1500MB/s.

- Since the H700 is a PCIe 2.0 x8 card, each PCIe 2.0 lane is theoretically capable of 500MB/s, and each SSD used in testing is capable of just over 500MB/s on its own, we were hoping to see something closer to 4000MB/s (8 x 500MB/s), or at least half that. But NOPE. The H700 appears to be limited to ~1500MB/s, which matters more or less depending on your specific R710 backplane (4, 6 or 8 bay).

- Additionally, we tested a 4 x disk RAID 0 array with the same SAS HDDs on a single channel, and got the same “speed limit” of ~1500MB/s, so this limitation seems to apply to the entire H700 Storage Controller card rather than to each channel. That seems weird in 2025, but it was most likely due to the cost of CPU and RAM in the early 2010s: the limiting factor of the card is not the bus speed, but the processing resources needed to saturate the channel buses. Back then, hard drives weren’t nearly as fast as they are in 2025, and cloud backups weren’t common yet, so RAID 0, 5, 6, 10, 50 and 60 arrays were FAR more popular, but they also had lower maximum data transfer speeds.

- There could also be something afoot with the way the Storage Controller “divides” its resources. ~1500MB/s was just the average sequential read/write speed reported by a single VM. When testing with fio and hdparm, the averages seem much higher on the single channel with 4k block sizes, which CrystalDiskMark in the Windows VM reports as extremely slow:

- #fio test read only:

sudo fio --filename=/dev/<specify drive to test here> --direct=1 --rw=randread --bs=4k --ioengine=libaio --iodepth=256 --runtime=120 --numjobs=4 --time_based --group_reporting --name=iops-test-job --eta-newline=1 --readonly

### results go below, after further testing ###

- #hdparm test string:

sudo hdparm -Tt /dev/<specify drive to test here>

### hdparm results ###

Timing cached reads: 16440 MB in 1.97 seconds = 8360.88 MB/sec

Timing buffered disk reads: 2642 MB in 3.00 seconds = 880.08 MB/sec

- All that said, using multiple smaller RAID0 sets (or RAID5/6 parity or RAID10 mirrored sets) could help balance out the H700 Storage Controller speed limitations and spread the workload across the smaller sets, for marginally better overall performance and (with the parity or mirrored options) better data resilience and safety. So the Dell H700 isn’t totally obsolete in 2025 (but it is certainly living its best Golden Years before retirement).

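The lane arithmetic in the H700 bullets above can be written out as a quick back-of-the-envelope check. This is a minimal sketch, assuming PCIe 2.0 signalling at 5 GT/s per lane with 8b/10b encoding (which is where the ~500MB/s per lane figure comes from) and using the ~1500MB/s ceiling from our own testing, which is an observation, not a Dell specification. The function and constant names are our own, purely illustrative.

```python
# Back-of-the-envelope PCIe 2.0 throughput check for the Dell H700 (illustrative sketch).

RAW_MBIT_PER_LANE = 5000        # PCIe 2.0 signalling rate: 5 GT/s per lane
DATA_BITS, LINE_BITS = 8, 10    # 8b/10b encoding: 8 data bits per 10 bits on the wire
H700_OBSERVED_MB_S = 1500       # ceiling observed in CoolElectricity testing, not a Dell spec


def pcie2_lane_mb_s() -> float:
    """Theoretical data bandwidth of one PCIe 2.0 lane, per direction, in MB/s."""
    return RAW_MBIT_PER_LANE * DATA_BITS / LINE_BITS / 8   # 5000 * 0.8 / 8 = 500


def pcie2_link_mb_s(lanes: int) -> float:
    """Theoretical per-direction bandwidth of a PCIe 2.0 link with `lanes` lanes."""
    return pcie2_lane_mb_s() * lanes


def expected_ceiling(drives: int, per_drive_mb_s: float, lanes: int = 8) -> float:
    """Whichever is slowest: the drives combined, the PCIe link, or the observed H700 limit."""
    return min(drives * per_drive_mb_s, pcie2_link_mb_s(lanes), H700_OBSERVED_MB_S)


if __name__ == "__main__":
    link = pcie2_link_mb_s(8)
    print(f"PCIe 2.0 x8 theoretical: {link:.0f} MB/s")                       # 4000 MB/s
    print(f"Observed H700 ceiling: {H700_OBSERVED_MB_S / link:.1%} of that")  # 37.5%
    print(f"8 x SATA SSD (~500 MB/s each): {expected_ceiling(8, 500):.0f} MB/s")
    print(f"4 x SAS HDD (~250 MB/s each): {expected_ceiling(4, 250):.0f} MB/s")
```

The observed ceiling works out to 37.5% of the x8 link, which is why adding faster drives doesn’t move the needle. Also keep in mind that hdparm’s -T “cached reads” figure (the ~8360MB/s above) reads from the Linux buffer cache without touching the disk, so only the -t “buffered disk reads” figure is comparable with these numbers.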
Storage Controller Tools for Compatible Storage Controllers with Dell R710

- Perccli (Command Line for Linux)

- It isn’t pretty, but it might work. It can help with a few things that you can’t “see” from the running Operating System.

- Dell Support download page for Perccli (Linux)

- Storcli

- This LSI / Broadcom interface might need to have LSI firmware flashed to the device to be completely useful. DYOR and YMMV.

- #TODO: megacli/megacli64?

- #other?

Working with Storage Controllers and PCIe Adapters in Unsupported Configurations for ZFS Pools, NVMe and Unsupported Boot Devices in Dell R710

Since we are limited to a total of three PCIe 2.0 x8 slots, including the one used by the Storage Controller on Riser 1 and two PCIe x8 slots on Riser 2, it might be possible to achieve

How to achieve

If you are limited by the available Storage Controller options for the R710, like many others, but still want to try different storage configurations and potentially leverage the benefits of ZFS, and you are willing to risk data loss and/or data corruption, there are some highly unrecommended ways to squeeze more performance from the aging R710. While you will be shunned and chastised by the ZFS community for doing such things, these tricks may still fit your use case, assuming you have a proper backup strategy in place for your data and you are aware of (and ok with) the potential for data corruption and data loss.

Do you live on the edge?

Well, ok then.

If you still REALLY want to use ZFS on a card that does not support IT Mode (or JBOD) in any way, shape or form (such as the PERC 6/i and the H700), then it IS possible to configure each individual disk as its own RAID0 array “Virtual Disk (VD)” (which is silly, but doable). Keep in mind that doing it this way will not provide the full data integrity benefits possible with ZFS. You are making a tradeoff for added performance at the risk of data loss.

Once you configure your “single disk arrays” in RAID0, the disks will show individually to the operating system, and can then be configured for use with something like ZFS (or even used in “Storage Spaces” on Windows, if that’s your thing).

How to Get a Bootable NVMe disk on The Dell PowerEdge R710 (Hacky… But It Works!)

There was an excellent write-up done here about this subject, which seems to be able to work with the R710 as well: https://tachytelic.net/2020/10/dell-poweredge-install-boot-pci-nvme

Here are the most basic highlights of how to get an NVMe device on your system:

- Find a compatible NVMe to PCIe 3.0+ x4 lane adapter card design that will work with the chipset on the R710 by being totally backwards compatible with PCIe 2.0 x4

- BE VERY WARY of ANY PCIe adapter that is under $10 USD brand new! Many of these extremely cheap NVMe PCIe adapters only work with more recent chipsets, and the R710 is anything but recent in 2025! You will likely be better off spending around $20-25 on a more compatible, higher quality PCIe 3.0 x4 adapter that supports 1 NVMe drive.

- Do NOT try to use a 2 x disk or 4 x disk NVMe PCIe adapter with x8 or x16 lanes in the R710!!! *IT WILL NOT WORK.

- Ok, so MAYBE it *could* work in some edge cases… but, there are some major caveats:

- Assume this probably will not work if you try to use a multi-disk NVMe-to-PCIe adapter. We are not trying to get your hopes up.

- IF you perhaps already own a multi-disk NVMe adapter that has either a x4 or x8 PCIe lane design, and you only populate ONE of the NVMe drive slots with an NVMe disk in the “1st position”, then you MIGHT be able to get the NVMe disk to be recognized by your system, assuming you have NVMe drivers that work with your NVMe disk and Operating System.

- Also, MAYBE if you spend a small fortune on a “No Bifurcation Support Required” rarity of a PCIe-to-NVMe adapter, this *might* (still probably not, tho) work for your R710. But EVEN IF IT DID, you would HAVE to use one of the two x8 PCIe 2.0 slots on Riser 2, and even STILL you would only ever see 2 NVMe disks at most.

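The same lane arithmetic explains why even a working single-drive adapter won’t deliver an NVMe drive’s rated speed in the R710: a PCIe link trains to the lower generation and the narrower width of the card and the slot, so a PCIe 3.0 x4 adapter runs as PCIe 2.0 x4 here (~2000MB/s theoretical, before protocol overhead). This is a minimal sketch, assuming nominal per-lane rates; the 3500MB/s drive rating is a hypothetical example, and the sketch says nothing about whether the adapter will be detected or be bootable.

```python
# Sketch: estimate what an NVMe adapter can deliver once its PCIe link trains in the R710.
# A PCIe link comes up at the lower generation and the narrower width of card and slot.

# Nominal per-lane, per-direction data rates in MB/s, after line encoding.
PER_LANE_MB_S = {
    1: 2500 * 8 / 10 / 8,      # PCIe 1.x: 2.5 GT/s, 8b/10b   -> 250
    2: 5000 * 8 / 10 / 8,      # PCIe 2.0: 5 GT/s, 8b/10b     -> 500 (the R710's slots)
    3: 8000 * 128 / 130 / 8,   # PCIe 3.0: 8 GT/s, 128b/130b  -> ~984.6
}


def negotiated_link_mb_s(card_gen: int, card_lanes: int, slot_gen: int, slot_lanes: int) -> float:
    """Bandwidth of the link after it trains to the lowest common generation and width."""
    gen = min(card_gen, slot_gen)
    lanes = min(card_lanes, slot_lanes)
    return PER_LANE_MB_S[gen] * lanes


def effective_nvme_mb_s(drive_mb_s: float, card_gen: int, card_lanes: int,
                        slot_gen: int, slot_lanes: int) -> float:
    """A drive can't go faster than the link it sits behind."""
    return min(drive_mb_s, negotiated_link_mb_s(card_gen, card_lanes, slot_gen, slot_lanes))


if __name__ == "__main__":
    # Hypothetical 3500 MB/s drive on a PCIe 3.0 x4 adapter in a Riser 2 PCIe 2.0 x8 slot.
    print(effective_nvme_mb_s(3500, card_gen=3, card_lanes=4, slot_gen=2, slot_lanes=8))  # 2000.0
```

An x8 slot doesn’t help an x4 adapter: the link width is capped by the narrower end, so the result is the same in either Riser 2 slot.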
You (Most Probably) Do Not Have A Good Reason To Boot From NVMe or a ZFS Pool

There. We said it.

It’s one thing to get the correct type of PCIe-to-NVMe Adapter that works on the R710, or perhaps to just buy a PCIe NVMe drive (as in, the type of NVMe drive that uses an x4 or x8 PCIe interface connection, no adapters required), but it’s another thing to actually get the NVMe drives to be bootable.

Will having an NVMe boot disk speed up boot times for the R710?

It will… buuuuuuuuut…. Probably not by more than 5-10% in overall boot time.

Why is that, you ask?

Boot times are RIDICULOUSLY long for the R710 by 2025 standards. But most of the boot time comes from the PowerEdge loading all the component configurations and taking its inventory to report to iDRAC and the Lifecycle Controller. Combine that with the “Memory Configurator” operation at the top of each boot cycle and the slow-for-2025+ PCIe 2.0 bus speeds, and the R710 is just never going to have fast boot times. It never did. It never will.

For example, if you are running XCP-ng as a hypervisor, you might see a full minute or three shaved off of a 5+ minute boot time to a usable Guest VM login prompt if you move from a single HDD boot device to an NVMe boot device. But in the grand scheme of things, that’s not really that big of a deal. This assumes that you will be using your R710 as it was intended and designed to be used: As a SERVER platform.

If you want a desktop PC, then buy a desktop PC. But that’s not what an R710 is, or ever will be.

Furthermore, hypervisors like XCP-ng and Proxmox tend to write ENORMOUS amounts of logs to the OS partitions by default. So you are essentially burning up your NVMe disk with operating system logs, rather than using that valuable NVMe capacity to improve your database read and write performance, or to increase the responsiveness of RDP sessions into GUI OSes for gaming or web browsing.

- Note that there are some tricky ways to change the default logging directories/partitions for a hypervisor, though. So if you are so committed to the idea of using a bootable NVMe or ZFS setup because you have an ACTUAL valid use case to do so (you probably don’t…), then do some digging and sort out how to log your OS out to a more durable disk so that you don’t burn up your fast-and-expensive storage devices.

But, again, the R710 was never meant to be rebooted frequently. It was designed to stay powered on literally FOREVER. Redundant Power Supplies that are hot-swappable, removable drive trays in the front, iDRAC6 accessibility totally independent of the R710 being booted, even a dedicated RJ45 port for iDRAC6. So many features just scream: “I never need to be turned off, EVER!”

Your best bet, in the strong opinion of the team members at CoolElectricity, is to use NVMe where you need fast and responsive actions to improve the User Experience of apps you’ve deployed, or for specific tasks like Video/Audio transcoding and/or storage for streaming media services. Does your application have a large and heavily used database? A Read-Only copy of your database that gets accessed by your application is a FANTASTIC use case for NVMe. Does your web server (eg nginx) need to load pages and artifacts quickly because some things can’t be loaded asynchronously without errors? Also a valid use of NVMe disk space.

What you really want is a way to READ faster while using as few WRITES as possible, which will significantly extend the life of your NVMe drives. So design your systems with that in mind, or keep dumping money into new NVMe drives and enduring long downtimes while you wait on parts deliveries.
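A rough way to put numbers on that: divide the drive’s endurance rating (TBW, terabytes written, from its spec sheet) by your average daily writes. This is a minimal sketch; the 300TBW rating and the daily write volumes are hypothetical examples, not measurements from our R710, and it ignores write amplification, which makes real-world wear worse.

```python
# Rough NVMe lifespan estimate from a drive's TBW (terabytes written) endurance rating.
# Ignores write amplification and over-provisioning; treat results as a ballpark only.


def years_of_endurance(tbw_rating_tb: float, gb_written_per_day: float) -> float:
    """Estimated years until the TBW rating is used up at a steady daily write volume."""
    if gb_written_per_day <= 0:
        raise ValueError("daily writes must be positive")
    days = tbw_rating_tb * 1000 / gb_written_per_day   # decimal TB -> GB, as on spec sheets
    return days / 365


if __name__ == "__main__":
    rating = 300  # hypothetical 300 TBW drive
    for label, gb_per_day in [("write-heavy (hypothetical)", 200),
                              ("read-mostly (hypothetical)", 20)]:
        print(f"{label}: ~{years_of_endurance(rating, gb_per_day):.1f} years")
```

Because the estimate scales directly with write volume, cutting daily writes by 10x stretches the estimate by 10x.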

How to Configure ZFS With Dell PowerEdge R710 Storage

Linux, BSD and other similar (often obscure) Operating System families or distros are where you will find the ZFS file system. It’s pretty great. This guide will not even come close to delving into all the nuances and tricky configurations that are available with ZFS (eg sync, ZIL logs, L2ARC caching, zpool tuning, zpool strategies, etc), but we will touch on the basics, briefly.

XCP-ng is a popular Hypervisor that has support for ZFS. Here is an extremely basic primer on how to get ZFS going on XCP-ng by only using the command line – https://xcp-ng.org/docs/storage.html#storage-types

Proxmox is probably even more popular, despite the lack of production-grade status for its open source “free” offering. It has ZFS support, too – https://pve.proxmox.com/wiki/ZFS_on_Linux

Oracle has some useful docs about how to get ZFS going with zpools and other technical details – https://docs.oracle.com/cd/E36784_01/html/E36835/gaynr.html

While TrueNAS obfuscates away from the command line ZFS operations a bit, there is a fair amount of technical details about various ZFS parameters found here – https://www.truenas.com/zfs/

Lawrence Systems has a very solid forum and YouTube Channel where they cover a decent amount of ZFS use cases – https://forums.lawrencesystems.com/t/local-zfs-on-xcp-ng/19453/2

Level1Techs goes quite a bit deeper than just about everyone else on subjects like ZFS and hardware configurations – https://forum.level1techs.com/t/zfs-guide-for-starters-and-advanced-users-concepts-pool-config-tuning-troubleshooting/196035/40?page=2

Basic rules of thumb for ZFS users that might already be familiar with RAID:

ZFS works far better with direct disk access, instead of using “tricks” like single disk RAID0 arrays or the like when connected to a Storage Controller with primarily RAID functions. What ZFS likes most, is HBA controllers that are purely IT-mode only, or JBOD without being configured as a Virtual Disk.

ZFS can perform much better with “mixed disks” than RAID can, though it’s still usually a good idea to use matching disks, rather than just matching partition capacities. But you do you.

ZFS caching functions are profoundly superior to RAID for flexibility, performance and data integrity/safety (most of the time). But all of the benefits of ZFS come at a cost of RAM for ARC. Usually 16GB will work, but for larger zpool capacities over 14TB and multiple vdevs, you might want to consider bumping that up to roughly 1GB of RAM per Terabyte in your zpools, plus 2GB (for example, 20GB for an 18TB pool). This is not a hard and fast rule, but a generic, one-size-fits-most suggestion that you hear often from ZFS enthusiasts. Who knew a file system could get people enthusiastic? It really is that good, though.
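Read literally, that rule of thumb is “16GB up to about 14TB, then roughly 1GB per Terabyte plus 2GB” (which is why 14TB lands exactly on 16GB). Here is a minimal sketch of that reading; it is our interpretation of a community heuristic, not anything OpenZFS requires, and ARC adapts to whatever RAM it is given.

```python
# One reading of the community ZFS RAM rule of thumb (not an OpenZFS requirement):
# 16GB covers pools up to ~14TB; beyond that, ~1GB per TB of pool capacity plus 2GB.


def suggested_zfs_ram_gb(pool_tb: float, baseline_gb: float = 16, threshold_tb: float = 14) -> float:
    """Suggested system RAM (GB) for a ZFS host, given total zpool capacity in TB."""
    if pool_tb < 0:
        raise ValueError("pool size can't be negative")
    if pool_tb <= threshold_tb:
        return baseline_gb
    return pool_tb + 2   # with the defaults this is continuous: 14TB + 2 = 16GB


if __name__ == "__main__":
    for tb in (4, 14, 18, 24):
        print(f"{tb}TB pool -> ~{suggested_zfs_ram_gb(tb):g}GB RAM")
```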

Quickie ZFS tut:

Assumption: You are running Ubuntu Linux. You have 2 disks that you want to use in your ZFS zpool, and you know which ones they are in the list of drives shown by the lsblk command. Let’s assume they are /dev/sda and /dev/sdb, and create a basic, unoptimized striped zpool named “tank” (a striped zpool is similar to RAID0), mounted at /mnt/zfs. Let’s go:

sudo apt install zfsutils-linux -y # install the ZFS tools (Ubuntu's package name is zfsutils-linux)

sudo modprobe zfs # load zfs into your OS kernel

sudo zpool create -f -o ashift=12 -m /mnt/zfs tank /dev/sda /dev/sdb # create 2 disk striped zpool “tank”

zpool list # you should see your zpool “tank” in the list of zpools

#BONUS OPTION: disable sync for a performance gain

sudo zfs set sync=disabled tank # performance boost, at the risk of losing the last few seconds of writes if the system crashes or loses power

#Did you mess something up or need to start completely over and delete the zpool (and all of its data!!)?

sudo zpool destroy -f tank # USE VERY CAREFULLY!

CoolElectricity Recommendations for Dell Supported Storage Controllers in The Dell PowerEdge R710 Server

Since the “Best We Can Do” from “Dell, Official” in an R710 is the Dell H700 Storage Controller, with a real-world benchmark maximum of ~1500MB/s Read/Write from an 8 disk RAID0 array, we can recommend using SAS HDDs or Consumer HDDs in sets of 2, 4 or 6 for RAID0, but we do not recommend an 8 disk RAID0 set like the one we tested.
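The reasoning behind those set sizes is mostly addition: a RAID0 set reads and writes at up to roughly the sum of its members, and anything beyond ~1500MB/s is wasted on the H700. This is a rough sketch, assuming the 200-300MB/s per-SAS-HDD range from our test notes below and ideal RAID0 scaling (real arrays scale less than perfectly); the function name is our own.

```python
# Rough RAID0 throughput per set size on the H700, assuming ideal striping
# and ~200-300MB/s per SAS HDD. Real arrays scale less than perfectly.
from __future__ import annotations

H700_CEILING_MB_S = 1500  # observed in our unscientific testing, not a Dell spec


def raid0_range(disks: int, low_mb_s: int = 200, high_mb_s: int = 300) -> tuple[int, int, bool]:
    """Return (low, high, capped): the estimated range after the H700 ceiling,
    and whether the uncapped high estimate would exceed that ceiling."""
    low, high = disks * low_mb_s, disks * high_mb_s
    return min(low, H700_CEILING_MB_S), min(high, H700_CEILING_MB_S), high > H700_CEILING_MB_S


if __name__ == "__main__":
    for n in (2, 4, 6, 8):
        low, high, capped = raid0_range(n)
        note = "  <- bumps into the H700 ceiling" if capped else ""
        print(f"{n} x HDD RAID0: ~{low}-{high} MB/s{note}")
```

At 6 disks the top of the range already touches the ceiling, and at 8 the whole range sits on it, so the extra disks mostly add failure risk (any single disk failure takes out a RAID0 set) without adding speed.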

Just How Unscientific-as-Possible Were The Dell H700 Performance Tests CE Used?

For the record, we tested the maximum ~1500MB/s Read and Write speeds on the H700 by using 3 different Windows 11 VMs in an XCP-ng Storage Repository (SR) that was otherwise unused (different boot disk and multiple SRs).

The disks used in testing were a mix of matching (ancient) Dell-official <2TB SAS HDDs and cheap consumer grade <2TB SATA III SSD disks. We will assume a maximum range of 200 – ~300MB/s with each SAS HDD and a maximum of ~500MB/s with each consumer SATA III SSD.

CE performed its testing with CrystalDiskMark on all 3 VMs, with 50GB of space allocated to each Win11 VM .vhdx, all on the same SR, using 5 x 32MB test sets. Peak speeds averaged near ~1500MB/s for SEQ1M Read, with similar maximums for Write. We did dozens of test set runs, in multiple ways, to recreate what a real-world usage scenario might “feel” like for the UX.

Even while all 3 tests were running, each VM was reasonably responsive, and it was still possible to open Firefox and browse the web while all 3 Windows VM copies were running CrystalDiskMark. While there was a noticeable slow-down, it wasn’t so dramatic that it was unusable. Most of the time, each 5 x 32MB test set average for SEQ1M was still over 600MB/s. That’s still roughly 5-15% faster than most single SATA SSDs.

So while the complexity of setting up the H700 might scare off most normies, the testing we used was more to demonstrate how it actually “feels” to use the VMs while other intensive disk activities are occurring in other “headless VMs”.

It really wasn’t too shabby. So you could mitigate inconsistent performance bottlenecks caused by disk load by breaking the 8 disk array into 2 or more sets, so that VMs that are not used with GUIs (eg. DNS Servers, NFS/SMB Servers, Docker Services, etc) reside on different Storage Repositories than those that are. Food for thought!

In light of our unscientific experiments, we also recommend using RAID10, RAID50, RAID5 or RAID6 with HDDs (smaller than 2TB each), based on the requirements of your use case. If you are using extremely old 3TB Enterprise HDDs that you found for a good deal, this might also be a viable option for you, as there will be a “natural overprovisioning” in this scenario, and should you ever move the disks or upgrade to a different storage controller, you could use the extra space that way.

RAID60 requires 8 disks, so this option only makes sense for the R710 models with an 8 x 2.5″ drive bay chassis.

If you want to use SSDs, we can only recommend sets of 2 or 3 in RAID0 configurations, or up to 8 disks in any redundant (parity or mirrored) RAID type (5, 6, 10, 50, 60). But redundant configurations for SSD RAID sets are kind of a waste of perfectly good (and more expensive) SSD space. This might be a viable option if you have cheap or slower/older SSDs, such as used and retired Enterprise SSD drives.

See the amazing Raid-Calculator.com tool to play around with what RAID configuration works best for your specific use case. Take note of the Read and Write “x” factors for the various RAID possibilities of your specific hard drive and backplane configurations.
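The capacity side of that calculation is easy to sketch yourself; the read/write “x” factors depend more on the controller and workload, so leave those to the calculator. This is a minimal sketch, assuming identical disks and two spans for RAID50/60 by default; the function names are our own, and it doesn’t model hot spares.

```python
# Usable capacity for the hardware RAID levels discussed above, assuming identical disks.


def _require(ok: bool, message: str) -> None:
    if not ok:
        raise ValueError(message)


def usable_capacity_tb(level: str, disks: int, disk_tb: float, spans: int = 2) -> float:
    """Usable capacity in TB for RAID 0/5/6/10/50/60.

    `spans` is the number of RAID5 (RAID50) or RAID6 (RAID60) groups striped together;
    it is ignored for the other levels.
    """
    _require(disks >= 1, "need at least one disk")
    if level == "0":
        data_disks = disks
    elif level == "5":
        _require(disks >= 3, "RAID5 needs at least 3 disks")
        data_disks = disks - 1              # one disk's worth of parity
    elif level == "6":
        _require(disks >= 4, "RAID6 needs at least 4 disks")
        data_disks = disks - 2              # two disks' worth of parity
    elif level == "10":
        _require(disks >= 4 and disks % 2 == 0, "RAID10 needs an even number of disks, at least 4")
        data_disks = disks // 2             # every disk is mirrored
    elif level in ("50", "60"):
        parity = 1 if level == "50" else 2
        _require(spans >= 2 and disks % spans == 0 and disks // spans >= parity + 2,
                 f"RAID{level} needs {spans} equal spans of at least {parity + 2} disks")
        data_disks = disks - spans * parity  # parity is paid once per span
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    return data_disks * disk_tb


if __name__ == "__main__":
    # Hypothetical 8-bay R710 with 8 x 1TB disks.
    for level in ("0", "5", "6", "10", "50", "60"):
        print(f"RAID{level}: {usable_capacity_tb(level, 8, 1.0):g} TB usable")
```

The RAID60 check is why it needs 8 disks on the R710: two RAID6 spans of at least 4 disks each.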

Using SSDs for the CacheCade feature is starting to make more sense now, though, right? For example, you might pair a 3 x HDD RAID0 with a 1 x SSD RAID0 CacheCade Virtual Drive (VD) for the best speed and capacity balance PER CHANNEL (4 disks per channel in an 8 bay R710, 3 disks per channel in the 6 bay, etc). But this doesn’t make sense for every use case, either. You might be better off NOT using CacheCade and instead using the same 2 sets as 2 different RAID0 Storage Repositories, rather than sacrificing your precious fast SSD space in an attempt to speed up what you already know is fairly slow, or pushing the H700 to its ~1500MB/s limit with each of your 2 RAID0 sets.

Play around with it. Find what works best for you.

- Note that, according to Dell: “The CacheCade is supported only on Dell PERC H700 and H800 controllers with 1 GB NVRAM and firmware version 7.2 or later, and PERC H710, H710P, and H810.” – https://www.dell.com/support/kbdoc/en-us/000136940/measuring-performance-on-ssds-solid-state-drives-and-cachecade-virtual-disks

We at CoolElectricity prefer cost-to-performance-and-capacity with a proper backup strategy in place, rather than an absolute minimum downtime and (much) lower-than-ours risk tolerance for data loss. So we do not recommend using CacheCade, as the cost-of-performance-per-byte-of-storage benefits are just not there, since, by design, it takes an already more expensive and faster data disk medium (SSDs) and slows it down to bring up the speeds of slower HDDs while sacrificing the faster SSD capacity and adding even more complexity. But some other mission critical services may not be able to afford downtime (eg. Hospital Critical Care Units, Financial Markets/Services, etc). Does anyone’s livelihood, mental or physical health depend on this data? For these nuanced reasons, you will have to decide what your trade-offs will be for your system.

Using later model Dell Storage Controllers Designed for Newer PowerEdge R7XX Models in The R710

Using a later model, HBA-capable Storage Controller that was designed for an R720/R720xd or R730/R730xd just might be the way to go in order to max out the x8 PCIe 2.0 lanes of the storage controller slot at the bottom of Riser 1 (or as a 2nd storage controller in Riser 2) and the backplanes of the R710. These later Dell cards most likely have the highest odds of working smoothly.

Dell H730P is confirmed to work well in the R710 by Spiceworks members, here: https://community.spiceworks.com/t/dell-h730-in-an-r710-revisited-what-case-modification-is-needed/805185/3

Since a Dell H730P works, then the H730 will most likely work also.

BUT, the caveat here, is that this Storage Controller was manufactured after the “Dell R710 Storage Controller bracket holder clippy thingy” was designed, so it may or may not fit properly.

Furthermore, the lone PCIe 2.0 x8 slot on Riser 1 has “Dell protections” that prevent any unexpected Storage Controller from working in it “out of the box”. So chances are, you will have to use one of the 2 PCIe 2.0 x8 slots on Riser 2 instead.

See here for someone’s experience trying to install the Dell H730 in an R710 (SPOILER “Invalid PCIe card in the Internal Storage slot!” – but read on) – https://community.spiceworks.com/t/dell-h730-in-an-r710-revisited-what-case-modification-is-needed/805185

The Art of Server for the win AGAIN! – https://www.youtube.com/watch?v=v0AEHVdc_go

Basically, Dell tries to “block” that one lone PCIe 2.0 x8 port on Riser 1 so that it only accepts the “Dell Supported R710 storage controllers” that we did a deep dive on, up above. But you can hack this, if you need to for some reason (This trick is EPIC, thank you to The Art of Server AGAIN!)

We could try this same trick with a different Storage Controller, SO KEEP IN MIND THIS IS STILL NOT THE MAGIC BULLET SOLUTION, but it does give us some hope where it may have been lost before. The card The Art of Server was using in the video was a Dell H200 with LSI firmware flashed to it. The firmware flash mistakenly set the card as having an “external” configuration, which Dell takes exception to in that bottom x8 PCIe port of Riser 1.

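Maybe this will be helpful to you. These are the PCI subsystem IDs listed for the H730 family in the pci.ids database (1028 is Dell’s vendor ID):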

~]# grep H730 /usr/share/hwdata/pci.ids

1028 1f42 PERC H730P Adapter

1028 1f43 PERC H730 Adapter

1028 1f47 PERC H730P Mini

1028 1f48 PERC H730P Mini (for blades)

1028 1f49 PERC H730 Mini

1028 1f4a PERC H730 Mini (for blades)

1028 1f4f PERC H730P Slim

1028 1fd1 PERC H730P MX
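Plain grep drops the context of which chip each of those subsystem IDs belongs to. In pci.ids, vendor lines are unindented, device lines are indented with one tab, and subsystem lines (the “1028 ....” entries above) with two tabs. This is a minimal sketch that keeps that context, so you can compare it with the IDs your card reports in `lspci -nn -v`; the function and type names are our own, and the file path varies by distro.

```python
# Sketch: find PCI subsystem entries in pci.ids while keeping the chip they belong to.
# pci.ids layout: vendor lines unindented, device lines one tab, subsystem lines two tabs.
import re
import sys
from pathlib import Path
from typing import Iterable, Iterator, NamedTuple

VENDOR_RE = re.compile(r"^([0-9a-f]{4})\s+(.+)$")
DEVICE_RE = re.compile(r"^\t([0-9a-f]{4})\s+(.+)$")
SUBSYS_RE = re.compile(r"^\t\t([0-9a-f]{4}) ([0-9a-f]{4})\s+(.+)$")


class Subsystem(NamedTuple):
    vendor: str      # chip vendor ID (e.g. 1000 for LSI / Broadcom)
    device: str      # chip device ID
    subvendor: str   # board vendor ID (1028 is Dell)
    subdevice: str   # board-specific ID (e.g. 1f42)
    name: str        # board name as listed in pci.ids


def find_subsystems(lines: Iterable[str], needle: str) -> Iterator[Subsystem]:
    """Yield subsystem entries whose name contains `needle` (case-insensitive)."""
    vendor = device = None
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line or line.startswith("#"):
            continue
        if line.startswith("C "):
            break  # the device-class section starts here; no more vendor/device data
        if m := SUBSYS_RE.match(line):
            if vendor and device and needle.lower() in m.group(3).lower():
                yield Subsystem(vendor, device, m.group(1), m.group(2), m.group(3))
        elif m := DEVICE_RE.match(line):
            device = m.group(1)
        elif m := VENDOR_RE.match(line):
            vendor, device = m.group(1), None


if __name__ == "__main__":
    needle = sys.argv[1] if len(sys.argv) > 1 else "H730"
    # The grep above used /usr/share/hwdata/pci.ids; Debian/Ubuntu usually use /usr/share/misc/pci.ids.
    paths = [Path("/usr/share/hwdata/pci.ids"), Path("/usr/share/misc/pci.ids")]
    path = next((p for p in paths if p.exists()), None)
    if path is None:
        print("pci.ids not found (it usually ships with pciutils or hwdata)")
    else:
        with path.open(encoding="utf-8", errors="replace") as f:
            for s in find_subsystems(f, needle):
                print(f"chip {s.vendor}:{s.device}  subsystem {s.subvendor}:{s.subdevice}  {s.name}")
```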

Collection of Details for Non-Dell Branded or Supported Storage Controllers in The R710 with PCIe 3.0 or Greater, With Backward Compatibility to PCIe 2.0

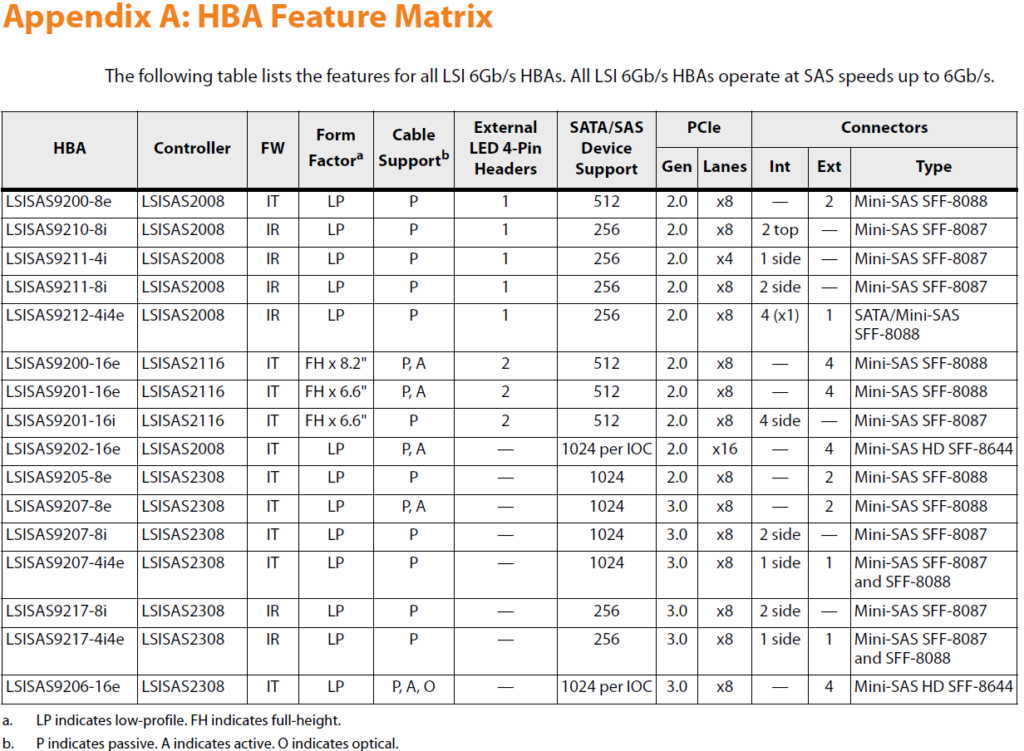

You will CERTAINLY be better off spending a few extra bucks on an LSI / Broadcom PCIe 3.0 x8 lane Storage Controller that is a “pure HBA” (in that there are zero RAID functions for it). While you won’t get the PCIe 3.0 theoretical speeds for the card, you will likely see a full saturation of the PCIe 2.0 x8 lanes in the R710 (assuming the proper Riser 1 x8 slot, or any of the Riser 2 x8 slots are used). You still are unlikely to see the full theoretical 4000MB/s for any configuration, but you will likely get much closer than the ~1500MB/s maximum the H700 provides.

Since LSI / Broadcom made SO MANY different models of Storage Controller cards (and there are other brands/models that are possibly R710 compatible), we can’t provide any specific details on which would work best. (And we don’t have any more Storage Controllers with proper SAS backplane cables that we can try in our R710, either… *shrug*)

ZFS with a high performance HBA Storage Controller using only SSD drives is the way to go, if you can sort it out. In this configuration, you will find the upper threshold for disk throughput on your specific R710 backplane.

Since it would probably be almost impossible to list EVERYTHING that has or has not worked from the LSI or other branded controller storage adapters, we will focus on the lowest cost and highest performing options, which may or may not work for your specific situation.

Here is a very generic starting point: the chart included in the following link (and in the image above) shows a limited list of possible options – https://www.servethehome.com/current-lsi-hba-controller-features-compared/

Here is a great example tutorial of how to flash a variant of an LSISAS2308 controller (The Art of Server comes through for us again!). THESE INSTRUCTIONS ARE ONLY SIMILAR. YOUR CARD WILL BE DIFFERENT, BUT THE PROCESS IS VERY SIMILAR, AND IT IS LINKED FOR DEMONSTRATION ONLY TO HELP YOU LEARN WHAT IS INVOLVED IN A FIRMWARE FLASH. HOPEFULLY THIS WILL SCARE YOU INTO JUST BUYING THE HBA VERSION! – https://www.youtube.com/watch?v=CDatT8fn9KQ

- NOTE that this video shows how to flash IT mode to a “2208” with the “2308 IT Mode” firmware variant of the similar controller. This is a rather advanced move! The Art of Server is a total PRO. Just like he mentions in the video, this is NOT a recommended path forward. (Watch all the way to the end!)

So what you need to take from this chart is that you basically have two good HBA options for the R710 in 2025. Both use the same Controller, and both have IR variants (IR is the RAID firmware, which you do NOT want if you want IT mode for use with ZFS). Since there is ALSO an IT mode variant of the same Controller and HBAs, that equates to: “it’s possible to get IT Mode on the LSISAS2308 Controller chip!” This is the issue with the Dell H700: there is no “HBA version in IT Mode”, but there totally could have been. LSI and/or Dell just didn’t bother making 2 different firmwares or manufacturing a non-RAID/IT mode-only version, whereas LSI made many (MANY) variants of the LSISAS2308.

Do you know which two they are? Can you see them? Narrow it down by looking for the PCIe 3.0 types at the bottom. You want the PCIe 3.0 models because they are fast enough that the PCIe 2.0 bus of the R710 will be maxed out and saturated, even though the controller would have slightly higher capabilities in a PCIe 3.0 bus (which the R710 doesn’t have).

Now look for the HBAs with only “i” and no “e” unless you want your hard drives to be “e”xternal and not “i”nternal on your R710 backplane.

- HBA LSISAS9207-8i

- HBA LSISAS9217-8i (might work, might not)

Has anyone reported that the LSISAS9217-8i works in an R710?

Yahtzee! According to LSI (now Broadcom), it appears to be doable – https://www.broadcom.com/support/knowledgebase/1211161500727/lsi-sas-9207—9217-firmware

Click the links from the document above for the 9217 and 9207 LSI firmware

List of LSI 9207-8i Controllers and Variants – PCIe

If you can find a version that is ALREADY an HBA or has been properly/correctly flashed for IT mode, you are going to have a far more pleasant time.

Yes, variants of the 9207-8i can be flashed to IT mode, but since so many variants of this card were made for so many systems, you may find that flashing different firmware goes much more smoothly on some PCs than on others.

LSI 9204? variants (untested, and scattered info – probably won’t show in LCC as bootable, but maybe?)

- IBM M5110

- Has IT Mode capabilities, but is sometimes difficult to Flash to IT mode

- https://lazymocha.com/blog/2020/06/05/cross-flash-ibm-servraid-m5110-and-h1110-to-it-hba-mode/

- Extended info: https://forums.servethehome.com/index.php?threads/some-additions-to-the-m5110-it-mode-flash-guide.39649/

- FUN FACT! This IBM M5110 controller has a tiny speaker that will FREAK OUT when you flash IT mode firmware onto this RAID (IR mode) controller card (LOL!). So… you will have to physically remove or disconnect this “alarm speaker” somehow to get it to shut up. But, on the upside, you’ll know when you’ve successfully flashed it to IT mode! …

- You CAN find IBM M5110 controllers that have “Pure HBA” configurations

- Look for the LACK of the RAM module used for RAID caching if you are committed to IT/HBA only (and don’t feel like fighting firmware flashes, ever – because you refuse to lower yourself to ever use RAID)

Using ZFS With The Dell PowerEdge R710

If you figured out a path forward to get the benefits of ZFS on the R710, perhaps with an aftermarket HBA Controller that is over-spec (eg an x8 PCIe 3.0 Storage Controller with 16 ports and a SATA power mod), then it’s relatively smooth to set ZFS up as virtual storage. Due to the complexities and possible drawbacks of using ZFS as a boot device, we will not cover that topic here (Isn’t this R710 series already long enough??). But there are some options for achieving that, which others will be more motivated and knowledgeable on. (“But why, tho?”, is what we at CE would ask. Booting from one of the available onboard SATA II ports works just fine, at up to ~300MB/s, the SATA II interface limit. Booting from the internal USB Port works, too. Booting from the SD Card could work… Using Clover to boot from an NVMe-enabled PCIe x4 card/adapter could work… Why use a ZFS Pool for your boot device??)

- #TODOs:

- Explain basic ZFS tank/pool creation

- Explain pool / disk separations

- Explain caching with ZFS

- Logging/Sync

- Block sizing

- Get cray and talk about mixing Hardware RAID with ZFS to significantly increase write-speed at the risk of losing all of your data in a single disk failure! YeA, PeRfOrMaNcE!

Using Unsupported Storage Controllers and Configurations in The Dell PowerEdge R710

Spiceworks Forums have a plethora of older information retained within them that include multitudes of knowledge from Experts and Dell Representatives. See here for a prime example of how Dell Representatives and other experts chime in together to make the internet a better place by answering such nuanced questions like “What controller to use in Dell R710”.

Keeping The Dell PowerEdge R710 Server Firmware, Drivers and Utilities Completely Up To Date, in 2025

Some time in the early 2020s, Dell seems to have completely removed support for the R710 from the typical built-in and formerly recommended tools that you might find for updating the R710 with ease. Dell has not been kind to R710 owners in doing this, as there are mountains of documentation on how to use the Lifecycle Controller and the various “Dell OpenManage” flavors to keep firmware up to date, maximizing R710 hardware compatibility, performance, security and features.

First, Dell changed the FTP address for its catalog from “ftp.dell.com” to “downloads.dell.com”, and that was ok… But then, Dell dropped the R710 from auto-upgradable models for downloads.dell.com. Not awesome.

Then, where it used to be possible to use wget from a command line to download the resources listed on the Dell Support page for the PowerEdge R710, you are now required to use a browser (or… fake one by sending a browser User-Agent, with curl’s -A option or wget’s -U / --user-agent option).
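If you’d rather script those downloads than click through a browser, the same trick works from Python’s standard library by sending a browser-style User-Agent header. This is a minimal sketch; the User-Agent string is just an example, the script name is hypothetical, the file URL is whatever you copy from the R710 support page, and there’s no guarantee Dell keeps accepting this.

```python
# Sketch: fetch a file from Dell's support site while presenting a browser-like User-Agent.
import shutil
import sys
import urllib.request

# Example browser User-Agent string (an assumption; any current browser's UA should do).
BROWSER_UA = "Mozilla/5.0 (X11; Linux x86_64; rv:128.0) Gecko/20100101 Firefox/128.0"


def build_request(url: str, user_agent: str = BROWSER_UA) -> urllib.request.Request:
    """Build a GET request that identifies itself as a web browser."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def download(url: str, dest: str, timeout: float = 60) -> None:
    """Stream the response body to `dest` instead of holding it all in memory."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
        with open(dest, "wb") as out:
            shutil.copyfileobj(resp, out)


if __name__ == "__main__":
    # Hypothetical usage: python3 fetch_dell.py <file URL from the R710 support page> <output file>
    if len(sys.argv) == 3:
        download(sys.argv[1], sys.argv[2])
    else:
        print("usage: fetch_dell.py URL OUTPUT_FILE")
```

Streaming with shutil.copyfileobj keeps memory use flat, even for the larger firmware bundles.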

- Dell EMC OpenManage Server Administrator Managed Node for Windows (OMSA)

- Version 9.3.0 is listed in the Dell PowerEdge R710 Support page as “the latest version”, plus a few patches that are marked as “urgent”.

- This hot mess of software seems to install ok on Windows 11, but as soon as you attempt to pull in any info on the R710, it quickly vanishes and/or fails. Also, if you say “yes” to the recommended upgrades to newer versions, there is some kind of a permissions bug that Dell Support just seems absolutely SILENT about in the Support Forums. It’s quite strange.

- While testing this software out for other Dell PowerEdge R-series Servers, it DOES seem to pull in the full “Catalog” of Dell Updates, which can be applied through a lot of confusing paths that mostly do not work for the R710 in 2025 and beyond.

- It’s very peculiar that Dell keeps the Drivers, Utilities and documentation up-to-date on the R710 Support Page, but the tools it seems to add either no longer work, or never did. It’s extraordinarily confusing to make sense of how to make sure you have all of your firmware for the R710 as up to date as possible, in 2025. In years past, there were a number of relatively easy and obvious ways to ensure that the firmware and BIOS versions were as updated as possible, such as through the Lifecycle Controller or System Management options at boot time, but those options are either gone, broken or simply never worked properly in the first place. It’s very tough to navigate and make sense of.

- For example, in the Lifecycle Controller menu options (entered at boot time with the F10 key), a pre-populated form with the address of ftp.dell.com (this address no longer works!) used to be presented, so users could simply click “Next” and voilà! The system would reach out to the public internet, download all of the most recent firmware, drivers, etc, and install it all automatically, without ever having to touch an actual Operating System. Why take these options away, when the Dell R710 is still one of the most popular Enterprise Server chassis of all time? It’s extremely odd.

- Also, the Windows desktop tools seem to have had “automatic downloading support” stripped away for the R710. If you can cobble together all of the firmware, driver and utility files on your own, along with the included catalog files and *.sign files, you can re-create the ftp.dell.com repository yourself and point the boot-time F10 menu at the locally hosted address to “fake” what Dell used to provide for R710 Server Admins, but that is an extraordinarily tedious task. Re-creating this “repository catalog” would also work with OMSA, assuming you install the version listed in the R710 support downloads section and don’t update it to the broken newer versions.

- WHAAAAAAAT a hot mess, Dell.
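If you do go the self-hosted route, one quick sanity check before pointing the F10 Lifecycle Controller (or OMSA) at your local copy is to confirm that every catalog file has its *.sign file sitting next to it. Below is a minimal Python sketch of that check. The `Catalog*.xml` / `Catalog*.xml.gz` filename patterns and the `missing_signatures` helper are assumptions for illustration; adjust the patterns to match the files you actually collected.

```python
from fnmatch import fnmatch
from pathlib import Path

# ASSUMPTION: hypothetical filename patterns for the catalog files that need a
# matching "<name>.sign" file. Adjust these to match the files you collected.
SIGNED_PATTERNS = ("Catalog*.xml", "Catalog*.xml.gz")


def missing_signatures(repo_root, patterns=SIGNED_PATTERNS):
    """Return relative paths of matching files that have no '<name>.sign' beside them."""
    root = Path(repo_root)
    missing = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        if any(fnmatch(path.name, pattern) for pattern in patterns):
            signature = path.with_name(path.name + ".sign")
            if not signature.is_file():
                missing.append(path.relative_to(root).as_posix())
    return missing
```

For example, `missing_signatures("/srv/r710-repo")` (a placeholder path) returns an empty list when every matching catalog file has its signature alongside it.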

- Dell OpenManage Enterprise Virtual Appliance (OME in a VM)

- #TODO: While this is a nifty, powerful tool, it’s also pretty janky and an ENORMOUS resource hog. In our initial testing, it seemed to need at least 16GB of RAM and 4 vCPUs to work properly. It has a TON of features for monitoring, upgrading, updating and maintaining Dell products, and a few other vendors’ products. More dabbling is needed to write up an intelligently crafted review of its capabilities.

- The 3 hypervisor options with pre-configured OME images

- Hyper-V (Windows 11 Pro or Windows Server)

- ESXi (VMware)

- KVM (libvirt, Proxmox, etc.; note that XCP-ng is Xen-based, not KVM)

Working With HBAs and Flashing Dell Storage Controllers with LSI / Broadcom Firmware for ZFS

WORK IN PROGRESS: A Collection of Reference Materials for How to Cross-Flash Dell Storage Controllers with MegaRAID / LSI / Broadcom BIOS for Various Storage Controllers to Leverage IT Mode with the Intention of Using ZFS in its Purest Form so that Disk Info Gets Maximized for ZFS Pool Stability and Performance

(Woooooooo, say that 3 times fast.)

Power Supply Options for The Dell PowerEdge R710 Chassis

- 570W

- The 570W Power Supply might be OK if you are using lower-TDP CPUs with a lower energy demand, and only a single channel of RAM. You will also need to consider how many drives you are powering with your particular Backplane and PCIe Storage Controller (for example, 8 x SSDs have a lower power draw). But in 2025, chances are you’re going to need more power if you intend to “max out” any aspect of the R710 in terms of performance and functionality.

- 870W

- If you are using all 3 Memory Channels and both CPUs (over 12 memory modules), you are going to need to use the 870W Power Supply. Full Stop.

- There may be some RAM configurations that work with a lower wattage, but from experience, pushing a server platform’s power limits never ends well.

- If you have the budget for the RAM, make sure you leave some room on your credit limit or bank account balance for a pair of 870W Power Supplies for the R710.

- 1100W (Not a Dell Supported Configuration, But Reportedly Possible!)

- There appear to be a number of different Part Numbers for power supplies from other Dell systems (the T710 and/or R910) that share the R710’s form factor, so it is therefore possible to use them as 1100W power supplies in the R710.

- 03MJJP, F6V5T, 0TCVRR, 01Y45R, 1Y45R, PS-2112-2D1, L1100A-S0, D1100E-S0, ps-2112-2d1-lf

- While an 1100W PSU is more than likely overkill for nearly every “supported standard system” upgrade possible, you may have an edge case, such as running 4 GPUs that require additional power (in tandem with the physically modded Riser 1 and Riser 2 ports, as discussed below), or running multiple Storage Controllers for more storage alongside bigger/faster/stronger/louder upgraded cooling fans. In such an edge case, or if an 1100W power supply that fits the R710 is (nearly) as cost effective as an 870W, this option would be the way to go.

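Marketplace listings rarely format these part numbers consistently. The list above includes both 01Y45R and 1Y45R, which suggests that the leading 0 on six-character Dell part numbers is just a prefix. Below is a minimal Python sketch for checking a listing’s part number against the list, assuming that leading-0 convention holds. The helper names are hypothetical.

```python
# Part numbers from the list above for 1100W PSUs reported to fit the R710.
# These are NOT a Dell-supported configuration.
REPORTED_1100W_PARTS = (
    "03MJJP", "F6V5T", "0TCVRR", "01Y45R", "1Y45R",
    "PS-2112-2D1", "L1100A-S0", "D1100E-S0", "ps-2112-2d1-lf",
)


def normalize_part(part):
    """Upper-case and trim a part number; drop the leading 0 of six-character Dell P/Ns.

    ASSUMPTION: a six-character alphanumeric part number starting with 0 is the same
    part as the five-character form without it (e.g. 01Y45R == 1Y45R).
    """
    p = part.strip().upper()
    if len(p) == 6 and p.isalnum() and p.startswith("0"):
        p = p[1:]
    return p


_KNOWN = {normalize_part(p) for p in REPORTED_1100W_PARTS}


def is_reported_1100w(part):
    """True if the part number matches one of the reported 1100W PSUs."""
    return normalize_part(part) in _KNOWN
```

With this sketch, `is_reported_1100w("0tcvrr")` and `is_reported_1100w("TCVRR")` both match, while unrelated part numbers do not.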

Some unscientific and guesstimatory napkin math for helping you choose the 870W over the 570W power supply options (Just get the 870W. Seriously. Do it.):

- Guesstimated Storage Power Requirements:

- Each HDD will require about 10W of power at high usage:

- 6 x 3.5″ HDD in 6 Drive Bay models = ~60W

- 8 x 2.5″ SAS HDD in 8 Drive Bay models = ~80W

- Each SSD will require about 5W of power at high usage:

- 8 x 2.5″ SSD Drive Bay = ~40W

- Guesstimated Memory (RAM) Power requirements:

- ~3W of power per 1.5V DDR3 RAM Module, 18 x 3W = ~54W

- CPU Power Requirements:

- Ranging from 130W maximum-TDP CPUs down to 60W CPUs (doubled, for 2 x CPUs)

- ~260W – ~120W

- Assume up to ~75W per PCIe card installed (a worst-case budget)

- (1 to 4) x 75W = ~75W – ~300W

- Miscellaneous factors (boot disks, USB disks, LCD, chipsets, network adapters, backplane, fans, etc.) for baseline system power = ~150W to ~200W

Realistic Best Case power requirements: ~450W to ~500W

- 2 x 60W CPUs

- 8 x SSDs to backplane

- nothing but a PCIe Storage Controller in the PCIe slots

- All 18 RAM slots filled w/ DDR3L

Realistic Maximum Power requirements: ~730W to ~800W

- 2 x 130W CPUs

- 6 x SAS HDDs to backplane

- 4 x ~75W GPUs + Storage Controller

- 2 x SATA HDDs (onboard SATA ports)

- All 18 RAM slots filled w/ 1.5V DDR3
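The napkin math above boils down to a sum of per-component figures. Here is a minimal Python sketch of it, using exactly the guesstimates from the lists above (10W per HDD, 5W per SSD, 3W per DIMM, 75W per PCIe card, 150W to 200W baseline). The function names are hypothetical, and the output is only as good as those rough guesses.

```python
# Rough per-component figures from the napkin math above (guesses, not measurements).
HDD_W = 10               # per HDD at high usage
SSD_W = 5                # per SSD at high usage
DIMM_W = 3               # per 1.5V DDR3 module
PCIE_CARD_W = 75         # per PCIe card installed (worst case)
BASELINE_W = (150, 200)  # boot/USB disks, LCD, chipsets, NICs, backplane, fans


def estimate_watts(cpu_tdp_w, cpus, hdds, ssds, dimms, pcie_cards):
    """Return a (low, high) estimate of total system draw in watts."""
    load = (cpu_tdp_w * cpus
            + hdds * HDD_W
            + ssds * SSD_W
            + dimms * DIMM_W
            + pcie_cards * PCIE_CARD_W)
    return load + BASELINE_W[0], load + BASELINE_W[1]


def one_psu_can_carry(high_watts, psu_rating_w):
    """With redundant PSUs, a single unit must be able to carry the whole load."""
    return high_watts <= psu_rating_w


best = estimate_watts(cpu_tdp_w=60, cpus=2, hdds=0, ssds=8, dimms=18, pcie_cards=1)
# 6 SAS + 2 onboard SATA HDDs; 4 GPUs + 1 storage controller counted as 5 cards.
worst = estimate_watts(cpu_tdp_w=130, cpus=2, hdds=8, ssds=0, dimms=18, pcie_cards=5)
print("best case:", best)  # best case: (439, 489)
print("maximum:", worst)   # maximum: (919, 969)
```

Summed this way, the best case lands at about 439W to 489W, in line with the ~450W to ~500W estimate. The maximum configuration comes out at about 919W to 969W, higher than the ~730W to ~800W figure, because this sketch assumes every component peaks at the same moment. At that level, a single 870W unit could not carry the load on its own if its partner failed, which is the kind of edge case where the 1100W option makes sense.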

Since most users will never need more than 800W of power, the recommendation is to use 2 of the 870W power supplies for redundancy. But again, in certain edge cases, or for cost-effectiveness and availability at the time of need, it may make more sense to purchase 1100W power supplies. We simply do not recommend the 570W, because it limits how far the system can be expanded.

IMPORTANT FINAL NOTE ABOUT REDUNDANCY OF DELL R710 POWER SUPPLIES:

Every Dell-branded power supply for the R710 is “redundant” in design. This means that if one fails (and they will, every few years or so), the system will continue to run on the remaining power supply (without even so much as a reboot!). This is useful to know if you want to set up a warning system with IDRAC6 notifications that email you when it detects a power outage or UPS Backup Battery failure (assuming you are using UPS Battery Backups, which you should) on at least one of the two power supplies.
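If you would rather poll the power supplies yourself than rely only on IDRAC6 email alerts, `ipmitool` can query the IDRAC6 over the network, assuming IPMI over LAN has been enabled in the IDRAC6 settings. Below is a minimal Python sketch. The helper names are hypothetical, the keyword check is a heuristic, and the sample text used to exercise it is illustrative rather than captured from a real R710 (sensor names and wording vary by system and firmware).

```python
import os
import subprocess

# Heuristic keywords suggesting a failed/unplugged PSU or lost redundancy.
# ASSUMPTION: adjust after checking what your own IDRAC6 actually reports.
PROBLEM_KEYWORDS = ("lost", "fail")
PROBLEM_STATUSES = ("cr", "nr")  # ipmitool's critical / non-recoverable states


def read_psu_sdr(host, user, password):
    """Ask IDRAC6 (via IPMI over LAN) for all 'Power Supply' type sensors."""
    env = dict(os.environ, IPMI_PASSWORD=password)  # -E reads the password from here
    result = subprocess.run(
        ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E",
         "sdr", "type", "Power Supply"],
        capture_output=True, text=True, check=True, env=env,
    )
    return result.stdout


def parse_sdr(text):
    """Split pipe-separated sdr lines into (name, status, description) tuples."""
    rows = []
    for line in text.splitlines():
        fields = [field.strip() for field in line.split("|")]
        if len(fields) == 5:
            name, _sensor_id, status, _entity, description = fields
            rows.append((name, status, description))
    return rows


def psu_problems(rows):
    """Return the rows that look like a PSU or redundancy problem."""
    return [
        row for row in rows
        if row[1] in PROBLEM_STATUSES
        or any(word in row[2].lower() for word in PROBLEM_KEYWORDS)
    ]
```

A cron job could run `psu_problems(parse_sdr(read_psu_sdr("192.0.2.10", "root", password)))` (placeholder address) and alert whenever the list is not empty.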

Proprietary Dell Software, Hardware and Firmware “Features” for the Dell PowerEdge R710

IDRAC6 (Integrated Dell Remote Access Controller, Version 6)

Dell IDRAC comes in 2 possible flavors for the PowerEdge Platform (at the time of writing in 2025):

- IDRAC Express

- IDRAC Express has limitations baked in that the IDRAC Enterprise “daughter card” does not. IDRAC Enterprise cost more at the time of purchase, so some R710s that came from a penny-pinching organization may be limited in the features available for remotely accessing and configuring your R710 via the dedicated RJ45 “IDRAC Console port”. IDRAC Express is not super awesome, but it does provide a few key features, like remotely powering the R710 on and off to begin a new boot cycle or to shut it down for maintenance, security or power-saving reasons. It also keeps a “log” of remote access, power cycles, log clearing, fan health, temperature and other hardware-related events.

- Full transparency from CE: we have never been stuck with IDRAC6 Express on the R710, because the R710 was one of the last PowerEdge models that allowed Dell’s IDRAC6 Enterprise License to be carried over between physical “daughter card” modules without the hoop-jumping to transfer, activate and “phone home”. You just buy a (dirt cheap in 2025) physical module that installs tool-less in the rear-right corner of the R710, and Bob’s your uncle with the fancy “IDRAC Enterprise Powers”.

- IDRAC Enterprise

- One of the key features present in the IDRAC6 Enterprise that is not on the IDRAC6 Express version is the “Remote Console” feature.

- Remote Console: Back in the day, a Java applet could interface with IDRAC6 over the local network and give you full access to the emulated video display (the same video feed you would see on a screen connected to the VGA port on the front or back of an R710), with full keyboard and mouse control, too.

- Java Insecurities: Since SO many “hacks” and vulnerabilities have been found in the older versions of Java that the Dell IDRAC6 relied on, it is a hugely annoying pain to get past all the security blockers in modern Java, which deliberately refuses the now-obsolete encryption standards the IDRAC6 uses. That said… it CAN still be done, with some hacking of Java settings involved. This is generally not a good idea on a system that needs Java for anything other than accessing IDRAC, though.

- Many people suggest using a separate VM with Windows Vista or XP and an ancient version of Java just for accessing older systems like IDRAC6 (Cisco routers and managed switches from the same mid-2000s to early-2010s era are often like this, too). This way, you can not only isolate this VM with ease, but also spin it up and down as needed, rather than leaving it running on the network as a juicy attack vector for a horrible human being that never got hugged as a child.

- Given the target audience of this content is likely home labbers, you likely have physical access to the R710 server if you need it, so this Remote Console “feature” might not be very important, unlike if you were trying to access this R710 from across the country on a Sunday at 4am in the year 2009, because your boss is having a meltdown over the server logs filling all the disk space and locking customers out from making new orders or accepting payments, and using the IDRAC Remote Console was your only recourse for rebooting the system so that you could “maintenance mode” your company application enough to last a few more days before upgrading hard drive capacity.

- We will slip it in here where no one will see it: Default Username is: root; Default Password: calvin (No one seems to really know why)

- Another “Good Idea” feature for IDRAC Enterprise is the “Firmware Update” option, right from the IDRAC6 console. Does it work? Meh. Not usually. The one thing it seems to be able to update properly is the Lifecycle Controller firmware. You will want to use Lifecycle Controller Firmware 1.7.5.4 and the associated “repair” package, which can be found on the Dell PowerEdge R710 Support Page under the Drivers tab, along with a ton of other confusing things.

- Version 1.7.5.4 is the very last (and therefore forever up-to-date) version of LCC firmware for the R710.

- The very last BIOS version was v6.6.0 and the final Remote Access Controller Firmware version was 2.92 (Build 05) (… we think?)

- You won’t be able to use IDRAC to perform the BIOS updates, but maybe some other things will work? Probably not.

- You will most likely have to figure out how to update your systems via the Linux command line, or by installing Windows directly on the host and performing updates that way.

- There are a number of interesting things in the IDRAC console at first glance, but we are not going to go too deep into IDRAC6, because it’s not as useful as it once was: its Remote Console is difficult/insecure to configure nowadays, the Firmware Updater options do not usually work, and aside from checking / clearing stats for voltages/power consumption, fan speeds, temps and Event Logs, it’s pretty janky. You can use it for powering on/off and rebooting, checking that the “System Inventory” is recognized (along with firmware versions), and perhaps checking the log to help find issues with critical system components like the storage controller and BIOS battery. If you can find a use for vFlash with a single 256MB partition, you’re probably a Dell fanboy.

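For the isolated-VM approach, much of the “hacking of Java settings” for the Remote Console comes down to loosening the `jdk.tls.disabledAlgorithms` property in the JRE’s `java.security` file (under `lib/security/` on Java 8, `conf/security/` on Java 9 and later) and adding the IDRAC6 address to the Exception Site List in the Java Control Panel. Below is a minimal Python sketch that rewrites that one property with the named entries removed. Which entries your IDRAC6 actually needs re-enabled is an assumption here (TLSv1 and TLSv1.1 in the test); check the Java console errors on your own setup, and only do this inside the throwaway VM.

```python
def patch_security_property(text, remove, key="jdk.tls.disabledAlgorithms"):
    """Return java.security text with the given entries removed from one property.

    The property's value may span several lines joined by trailing backslashes;
    the rewritten value is written back on a single line. All other lines,
    including comments, are passed through unchanged.
    """
    lines = text.splitlines()
    out = []
    i = 0
    while i < len(lines):
        line = lines[i]
        if line.startswith(key + "="):
            value = line[len(key) + 1:].rstrip()
            # Follow backslash line continuations to collect the whole value.
            while value.endswith("\\") and i + 1 < len(lines):
                i += 1
                value = value[:-1] + lines[i].strip()
            kept = [entry.strip() for entry in value.split(",")
                    if entry.strip() and entry.strip() not in remove]
            out.append(key + "=" + ", ".join(kept))
        else:
            out.append(line)
        i += 1
    return "\n".join(out) + "\n"
```

Read the file, pass its text through `patch_security_property(text, {"TLSv1", "TLSv1.1"})`, and write the result back after keeping a backup of the original. The same helper can target another property through the `key` parameter.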

Dell vFlash (and the Dell IDRAC6 with vFlash Module)

Just don’t bother. If you need such a tool, put bootable ISOs on a 16ish+ GB USB drive in the internal USB port, using a multi-boot manager like YUMI or Ventoy.

Dell vFlash can be used if you either have the SD Card module that fits in the top-front-left corner “under the lid” of the R710 (technically a hardware add-on that did not come standard, back in the day), or the “IDRAC6 with vFlash”.