A while back, I invested in myself by snagging a great deal on a Dell PowerEdge R710 server that had been recently decommissioned and refurbished. It was a phenomenal deal, considering it came with a Dual CPU configuration and a modest amount of DDR3 RAM. Over the years, I kept scouring the used server parts marketplaces for good deals on upgrades to the rapidly aging, but still INCREDIBLY functional R710. Initially, the server was delivered to me with a PERC 6/i RAID Controller and no hard drives, just the trays/caddies and a high-end-for-its-time DVD Burner.

I found an “I can’t believe this is the real price” deal on a 10-pack of high-endurance SSDs that actually sell for a higher price today in 2025 (new, in a sealed box) than what I paid for them back then. I was then armed with ~2TB of SSD disks and was anxious to start my journey with an ACTUAL Commercial Grade Server to run my Hypervisors, instead of using a “spare parts machine” that I hid under furniture close to the Modem/Router combo near the hallway that split the house. ESXi, XenServer, Debian 8/9 Server, Ubuntu 10/12/14 Server, CentOS, Red Hat, VirtualBox, Boxes, libvirt/virsh/vmm: I was trying them all out. It was a fun, exciting time to be learning new things and finding creative uses for virtualized environments, right from my own homelab.

Right away, though, I found that the “flashing yellow lights” on all the hot-swappable hard drive trays of the R710 were stressing me out. And the SCREAMING fans and incredible amount of heat exhaust pouring out of the 2U rack-mountable server sitting on a spare room dresser did nothing to help. I had listened to coworkers and read up on other “home lab experiences” with “self hosting” on a similar platform, and there were all kinds of interesting details to learn about: “fan scripts” that used ipmitool to “hack the fan speeds”, building your own temperature throttles with the kind of timer chips I’d used in college, server “placement” in weird areas to prevent hearing loss and excessive cooling bills, and on and on…

As it turned out, by “upgrading” the storage controller to the H700, I could not only get past the 2TB per-drive limit, but also get rid of the “yellow danger warnings” that the PERC 6/i was throwing off because of my non-Dell “unsupported” SSDs. And, not to bury the lede, but also as a MAJOR and totally unexpected benefit: QUIET THE FANS. So, obviously, I must have bought the H700 and that’s where the tale ends, right?… Not even close.

eBay has *almost* everything you could possibly want or need, in *almost* every condition that you could imagine. RAID Storage controllers with High-Profile brackets, Low-Profile brackets, both brackets included, with or without batteries and/or battery cables, with original heatsinks, upgraded heatsinks and/or/not fans, with or without SAS and/or forward and/or reverse breakout cables…, and on and on… For anything you couldn’t find on eBay “that day”, there were literally dozens of online retailers that had “eBay stores” but also had their own marketplaces, too. There were even a few somewhat-local-to-me companies that I could physically pick up my items from. The refurbishing business was amazing and bizarre, but I quickly learned that I would not have it any other way.

With all that said, I waited for the right combination of price and timing, and I executed my purchase of an H700… or… so I thought…

When the highly anticipated package finally arrived, I opened it up and immediately noticed some oddities compared to what I expected. What I ended up with was actually a PERC 5/i… I just didn’t know it yet. I tried to convince myself that I had somehow made a mistake in ordering, so I researched the listing and the device itself, and compared it closely, side by side, with my now-removed PERC 6/i. They used the same SFF-8484 connector, and the included SAS cables were the same SFF-8484 to SFF-8087 (to the R710 backplane), but I was expecting everything to be SFF-8087 for the H700. Did I read a bad / misleading Item Description on eBay and get suckered into buying something else? Was this maybe one of the many other non-Dell branded LSI/Avago cards that could possibly be even better than an H700? Nope. It was a total dud of a buy, since a PERC 5/i was a “downgrade”. But… I sent in some photos and exchanged a few messages with the seller, and he completely refunded me and didn’t even want it back. Apparently he mixed up the boxes and labels, and two of us got something we did not order, but… it was free to me! So… now what? It’s PCIe 2.0 x8… would this work in one of my spare PCs?…

The short answer: Yes.

The long answer… is this entire page of my ramblings.

Since these PERC 5/i, 6/i and H700 Storage Controllers are PCIe 2.0 x8, I initially assumed that there was an absolute maximum-possible I/O performance of nearly 4000MB/s (500MB/s per PCIe 2.0 lane, by 8 lanes: the “theoretical limit”, less the “overhead” of other bus activity). But with the PERC 6/i, I was never able to break the 1000MB/s mark, no matter WHAT I tried: SSDs capable of ~500MB/s each, RAIDed together in RAID 0, 5 and 10, with different disk counts in each set; it didn’t matter. Just under ~1000MB/s was usually the best I could get with the PERC 6/i (smaller chunks could “cache” and give higher numbers, but for Seq R/W only). So my 10-pack of SSDs was total overkill for the limitations of the Storage Controller. But now I had a (completely free) PERC 5/i to test, a super simple drop-in replacement for the PERC 6/i. What was the result? Horrible. Far worse, actually: somewhere in the neighborhood of ~600MB/s with 8 SSDs in a RAID0.
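Where does 500MB/s per lane come from? PCIe 2.0 signals at 5 GT/s per lane and uses 8b/10b line encoding, so only 8 of every 10 bits on the wire are data. Here is a minimal Python sketch of that arithmetic; the table and function names are my own, the rates and encodings are the standard PCIe figures, and real-world packet/protocol overhead still comes off the top of these numbers:

```python
# Theoretical one-direction PCIe bandwidth from signalling rate and line encoding.
# Packet/protocol overhead is NOT subtracted; real transfers land below this.

# PCIe generation -> (transfer rate in GT/s per lane, encoding efficiency)
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # PCIe 1.x: 8b/10b encoding
    2: (5.0, 8 / 10),     # PCIe 2.0: 8b/10b encoding
    3: (8.0, 128 / 130),  # PCIe 3.0: 128b/130b encoding
}


def pcie_ceiling_mb_s(generation: int, lanes: int) -> float:
    """Theoretical bandwidth in MB/s (1 MB = 10**6 bytes) for a link, one direction."""
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    payload_bits_per_s = gt_per_s * 1e9 * efficiency  # per lane, after encoding
    return payload_bits_per_s / 8 / 1e6 * lanes       # bits -> bytes -> MB, all lanes


if __name__ == "__main__":
    for gen, lanes in [(2, 8), (2, 4), (1, 8), (3, 8)]:
        print(f"PCIe gen {gen} x{lanes}: ~{pcie_ceiling_mb_s(gen, lanes):,.0f} MB/s")
```

For a PCIe 2.0 x8 link this gives the ~4000MB/s ceiling mentioned above; the gen 1 row is there because the same math halves if a card only negotiates a PCIe 1.x link.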

But… if you consider that an enterprise business would most likely use RAID5 or RAID10 (maybe even RAID6, if the storage controller supported it…), then these speeds suddenly make more sense in terms of “max performance” back in the day, when they were “as good as it gets”. With an 8-disk HDD RAID 0, for example, a PERC 5/i would come very close to maximizing the performance of all of them. In a RAID10 of 8 disks with read speeds peaking around ~125MB/s each, that’s 8x the read speed (~1000MB/s) and 4x the write speed (~500MB/s). With the PERC 6/i there was “CacheCade 1.0”, which could use one or more SSDs on one of the ports to boost Read speeds of the RAIDed disk sets. With the H700, “CacheCade 2.0” boosted Read AND Write speeds by adding an SSD or two (in the CacheCade configuration). So, while SSDs in 2015ish were still a budding technology with some endurance issues (and insanely high cost-per-GB), this actually made sense. And even the very best performance I’ve seen from a 3.5” HDD SATA or SAS disk is around ~200MB/s, which is up considerably from the older HDD expectations of ~80 to ~125MB/s each.
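The RAID10 numbers above follow a simple rule of thumb: reads can be served by every disk, while every write has to land on both halves of a mirror. A best-case Python sketch of that arithmetic (the function name is hypothetical; it ignores the controller’s own ceiling, seeks and caching, so real arrays will land lower):

```python
from typing import Tuple


def raid_sequential_mb_s(level: str, disks: int, per_disk_mb_s: float) -> Tuple[float, float]:
    """Best-case (read, write) sequential MB/s, ignoring the controller's own ceiling."""
    level = level.lower()
    if level == "raid0":
        # Data is striped across every disk, so reads and writes both scale with disk count.
        return disks * per_disk_mb_s, disks * per_disk_mb_s
    if level == "raid10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID10 needs an even number of disks, at least 4")
        # Reads can be split across both halves of each mirror, but every write
        # lands on both halves, so writes only scale with the number of mirror pairs.
        return disks * per_disk_mb_s, disks // 2 * per_disk_mb_s
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    read, write = raid_sequential_mb_s("raid10", disks=8, per_disk_mb_s=125)
    print(f"8-disk RAID10: ~{read:.0f} MB/s read, ~{write:.0f} MB/s write")
```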

That is admittedly a whole lot of words and numbers to make this point: You can still find a few viable use cases for the old Old OLD OLDER Storage Controllers of yesteryear, even in 2025. The biggest limiting factor of the older pre-H700 storage controllers is the 2TB size limits for individual disks.

The Importance (or Lack Thereof) of Having a Battery for Your RAID Controllers

In a Production system with mission critical data, I HIGHLY recommend you spend the extra $30-$50+ for each RAID battery, AND have a rock-solid UPS Battery Backup System, too.

For a “lab”, though… you don’t absolutely NEED the batteries *IF*:

- You have an adequate backup and/or snapshot cycle that stores the most important data from your RAID arrays (see: 3-2-1 Rule)

- You are OK with not using the built-in cache and with the (somewhat risky and) frowned-upon “RAID0 for each disk” paradigm, and then, from the OS via software, you could:

  - Use software RAID instead, as it’s slightly more flexible and extremely similar in performance (nowadays)

  - Use each disk independently and/or mix and match different file systems, RAID or ZFS configurations

  - Use ZFS (The ZFS and TrueNAS Community is probably going to flip out if they read that, though…) with the “very-frowned-upon-and-considered-dangerous” fake-HBA style of configuration, which might also afford you the opportunity to use NVMe, Optane or SSD disk(s) for L2ARC caching performance gains.

- You don’t mind the performance hit from not having write-back (write-through, instead)

- You could also get away without using a battery and still use “Force Write-Back without Battery” (somewhat safely?) if you have a UPS/Battery backup for the entire system.

- For a “lab on a budget”, I HIGHLY recommend buying a better UPS battery system over buying lots of small RAID batteries and cabling. A decent UPS costs around $150-$200 and covers the whole system, which makes it a better “spend” than a bunch of RAID controller batteries and cables. BUT, I am talking about “labs”, here.

I will reiterate: In a Production system with mission critical data, I have an entirely different recommendation. Spend the extra $30-$50+ for each RAID battery, *if* you are still using RAID Controllers… AND have a robust UPS battery backup system, too(!!!). Hopefully you have moved away from traditional hardware RAID in 2025, and you are relying on non-RAID HBAs with ZFS (or “The Cloud”), instead.

Production systems should be protected at (nearly) any and all manageable costs.

I’ve worked/contracted for a few companies that had absolutely zero (-$0.00-) budget allotted for such things, and it has never EVER ended well, despite my pleadings, warnings and general noise-making. For non-mission critical data that can be restored within a few hours, these sorts of “exceptions” are “ok”, particularly for a home lab. But if you are paying 3 or more Developers and/or Engineers to “sit around and wait” for a few hours as the Data Recovery scripts complete data restoration and rebuild Development and Testing Environments a few times a year because of brown-outs, power flickers or a “Weather Event”, that Tri-Yearly $50-$1000 spend starts to make a whole lot more sense, too.

How Large of a Capacity HDD Can Be Used on Older Storage Controllers?

I tested an 8TB HDD and a 12TB HDD on the PERC 5/i, 6/i and H700. The PERC 5/i and 6/i only “saw” 2TB of the entire drive. Bummer.
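The 2TB ceiling matches the classic 32-bit LBA limit: 2^32 addressable blocks of 512 bytes each. I am assuming that is the cause on the PERC 5/i and 6/i (it lines up with the “2TB” these cards reported) rather than quoting Dell documentation, so treat this Python sketch as the arithmetic only; the names are hypothetical:

```python
SECTOR_BYTES = 512        # logical sector size the drive reports to the controller
LBA_BITS = 32             # assumed width of the block address the controller can use
LIMIT_BYTES = 2 ** LBA_BITS * SECTOR_BYTES  # 2**41 bytes: 2 TiB, about 2.2 TB


def visible_bytes(drive_tb: float) -> int:
    """Bytes a 32-bit-LBA controller can address on a drive sold as `drive_tb` (decimal) TB."""
    return min(int(drive_tb * 10 ** 12), LIMIT_BYTES)


if __name__ == "__main__":
    for size_tb in (2, 8, 12):
        seen = visible_bytes(size_tb)
        print(f"{size_tb}TB drive -> {seen / 10**12:.2f} TB ({seen / 2**40:.2f} TiB) visible")
```

This is also why 2TB drives are the sweet spot for these cards: a 2TB (decimal) drive fits under the limit, while anything bigger gets cut off at ~2.2TB.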

I tested a non-Dell 8TB (Seagate) HDD on the H700 with the latest firmware updates and it worked great.

BUT, have you priced SAS-capable breakout cables, 5-slot SATA power splitters and the “lot prices” for 2TB SAS and SATA hard drives on eBay or Amazon lately? (You really have to dig deep on Amazon, but there are a few hidden gems.) You could buy everything you need to turn your old PC tower into a “NAS Box” with 16TB of RAID0 storage that runs at about double the speed (~900MB/s) of a single SSD (SATA rev 3.0 is limited to ~600MB/s, but most SSDs are limited to 450-520MB/s). A PERC 6/i controller with a battery and bracket, two 1-to-4 breakout cables and 8 HDDs would cost around $200-$300. That’s roughly the cost of two brand-new 8TB HDDs, which give you fewer redundancy and performance options, albeit with lower power consumption and far less complexity. But the point remains that there are still some edge cases where a PERC 5/i or 6/i has a little bit of life left to give, at least for another year or two; maybe slightly longer, if the used market for 2TB drives continues to drop in price.
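The 16TB figure is simply 8 x 2TB in RAID0, with no redundancy at all. If you would rather trade some of that capacity for redundancy, here is a small Python sketch of the standard usable-capacity formulas (hypothetical function name; decimal TB as drives are sold, before filesystem overhead, no hot spares):

```python
def usable_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity, in the same units as disk_tb, for common RAID levels."""
    level = level.lower()
    if level == "raid0":
        return disks * disk_tb          # striping only, nothing reserved
    if level == "raid5":
        if disks < 3:
            raise ValueError("RAID5 needs at least 3 disks")
        return (disks - 1) * disk_tb    # one disk's worth of parity
    if level == "raid6":
        if disks < 4:
            raise ValueError("RAID6 needs at least 4 disks")
        return (disks - 2) * disk_tb    # two disks' worth of parity
    if level == "raid10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID10 needs an even number of disks, at least 4")
        return disks / 2 * disk_tb      # half the disks hold mirror copies
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    for level in ("raid0", "raid5", "raid6", "raid10"):
        print(f"8 x 2TB {level.upper()}: {usable_tb(level, 8, 2):g} TB usable")
```

With the same eight 2TB drives, that works out to 16TB (RAID0), 14TB (RAID5), 12TB (RAID6) or 8TB (RAID10).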

The H700, H710, H730, on the other hand, have even more upside and value in their longevity. Supposedly, using Dell-labeled HDDs (or those on the “supported list”) provides the highest levels of compatibility and function possible. In 2025, snagging an H700 with battery, battery cable and bracket is only about $40-$50. Without the battery and cable, you can get them for half (or even a third) of that, on some days.

There Is a Heavy Focus on Dell-Branded Storage Controllers and PowerEdge In This Post, But What About HP, IBM, Supermicro, etc?

HP and IBM controllers tend to suffer from the same issues with “branded versions of firmware” that Dell provides for its customers. There are not a ton of Storage Controller Manufacturers (nor have there been over the years and decades), so the vast majority of Storage Controllers ended up being “made” by LSI/Avago/MegaRaid, and then the “Branding Firmware” was applied to mostly the EXACT same hardware shared across the industry, just with different nomenclature. LSI made A TON of variations of its Storage Controllers, but only “the cream of the crop” tended to get used by the top “brands” for server platforms.

The Dell PERC 6/i is equivalent to the LSI SAS1078

The Dell H310 is equivalent to the LSI SAS2008

The Dell H700 is equivalent to the LSI SAS2108

The HP H220 is equivalent to the LSI SAS9207-8i

The Dell HBA330 is equivalent to the LSI SAS9300-8i (and the LSI SAS9300-16i is essentially 2x SAS9300-8i controllers in one PCIe card)

I won’t go through the entire list, obviously, but you get the idea. ServeTheHome is a great resource for mapping your “Branded” Storage Controller to its OEM equivalent. I am not 100% certain how (in)consistent the naming conventions are across ALL of the MANY different LSI SASxxxx controllers, but generally the first digit of the LSI SASxxxx chip name seems to track the controller generation, which lines up with its SAS link speed: the SAS1078 behind the PERC 6/i in our sample list above is a 3Gb/s part, while the SAS2008 and SAS2108 are 6Gb/s parts.

The ServeTheHome Rock Star known as fohdeesha has a lot of EXCELLENT guides on how to cross-flash the H310, H710 and H810 (and most of their variants) into “IT Mode” with the far superior LSI firmware (instead of Dell’s), but you may run into issues getting the PowerEdge series to boot without the Dell firmware on storage controllers installed in the “dedicated storage controller slots” (such as the R710 error “Invalid PCIe card found in the Internal Storage slot!”). There are some workarounds, though, that might help you.

Finding a Compatible Version of MegaRaid Storage Manager (MSM) for Older LSI/Avago/Dell PERC Storage Controllers… and Battling With All The Variations of Modern Java…

I flailed and wailed quite a bit with this, and if you have arrived here to read my post, you must be having a similar experience. The versions of MegaRaid Storage Manager (MSM) I was coming across were for Windows NT, Vista, 7, 8 and 10 (with a noticeable lack of ME, which I am totally fine with…). The various versions ranged from v4+ to v8+ to v10+, a few v15+ and even a few v17+. It was all a disorganized, confusing mess, and that was even before Broadcom bought up LSI (and all its IP). And all this confusion was just for the MSM software that was compatible with Windows 11, in 2025…

To add to it all, there is an old-school FTP site that now-Broadcom (formerly LSI/Avago/MegaRaid/Probably-others-I-am-forgetting) still hosts as part of its acquisitions. I remember this FTP site from before Broadcom purchased LSI, and it seems to slowly be eroding away in usefulness. You can find an extremely bizarre mix of nested and poorly organized directory trees with very inconsistent user permissions for the “tsupport” user. There was a time when I accidentally stumbled upon some software from other companies, such as old versions of Windows and more (which probably was not kosher and should not have been hosted on a public FTP like that…), but in this most recent MSM adventure, I only found random slide decks and 10-20 year old files that were likely from employees who have been long gone, but whose directories or user permissions were never revoked… ah, the 2000s… The spirit still lives on within a publicly accessible FTP server of one of the largest computer hardware conglomerates ever created in the history of Earth. I won’t link to the FTP site directly… as I’m not trying to contribute to the problem, if there is one (still), but I will link to the page with the FTP links, and describe the JANKY workaround I had to use to get the MSM installation files I wanted to try out (which *ACTUALLY* ended up being the ones that worked, too).

A positive and noteworthy point about Windows 11 and Dell-branded server hardware: The correct drivers actually auto-installed without any additional effort needed for the PERC 5/i, 6/i and H700, which was a bit shocking, considering the hoops I had to jump through to get an older HP Server PCIe 2.0 x4 Quad port 1Gb NIC adapter to work with Windows 10 or 11, the last time I tried to use Commercial Grade server hardware on a consumer desktop (which is ironically an HP-branded desktop… that did not go nearly as smoothly as installing Dell server component drivers did…).

I tested about a dozen different versions of MSM for this post by installing both the PERC 5/i and PERC 6/i in the same system and checking whether each version of MSM would recognize both cards and let me configure them. The idea was to test “the oldest” Storage Controller I had on hand (the PERC 5/i), and make sure it was still possible to use it outside of the now “For Sale” Dell PowerEdge R710, which now has “Dual H700 Storage Controllers” configured in it with 20+ hard drives. (If you would like the details on how I pulled that off in a system that is only designed for, at max, 8x hard drives, feel free to reach out with a comment or message and I can get into it. It’s VERY cool how I pulled it all off. It’s still for sale, too, as of Jan 2025. Just sayin’.)

A VERY Important Note About Cooling Requirements for Older Dell PERC 5/i and 6/i (and Similar) Storage Controllers:

Both of these storage controllers require a low-to-moderate amount of airflow across the primary (largest) heatsink on the adapter card. It doesn’t need a lot of airflow to prevent overheating, but it needs “some”. A general ballpark is about 15 CFM, which just about any common size of PC fan should easily provide. Consider that the PERC 5/i and 6/i were designed to be used in the enclosed space of a PowerEdge R710 or similar chassis, where the airflow is generated by the “front-to-back airflow” array of fans near the center of the server chassis. Without any airflow, the heatsinks will get EXTREMELY hot, to the point that they might actually burn you, melt something, or otherwise experience thermal failure that may or may not cascade throughout your system.

Which MSM Versions ACTUALLY Worked With Older PERC Storage Controllers, In Testing?

The highest version I found that still worked for both the Dell PERC 5/i and PERC 6/i was LSI MegaRaid Storage Manager v11.06.00.03, but with some caveats during installation… For whatever reason, there was a series of errors during the install, and I just kept clicking Next/Ok/Continue (instead of Cancel/Stop). Despite all of these errors, MSM v11.06.00.03 actually installed seemingly fine (as good as it gets for this old stuff, anyway). I didn’t try EVERY possible feature, and the software is a bit clunky and not super intuitive, but eventually you get the hang of it. It’s moderately complicated to explain how everything works, as it also depends on the configuration you want, but v11.06.00.03 seems to actually work fine, despite all the “noise” it made during installation. Navigate to the “Physical” tab (next to Dashboard), then right-click in the appropriate spot (on the PERC xxx Controller “branch”), and you will see all the familiar options from the BIOS boot utility (Ctrl+R or Ctrl+C), so you can hopefully muddle your way through a RAID configuration. Take a few moments to scout everything out and play around with different menus and settings before you “set it and forget it”. There might be some bugs or strange issues that you will want to be aware of, and these seem to differ between versions (in kind or in severity).

The lowest version of LSI/Avago MegaRaid Storage Manager (MSM) that I found to work for both the Dell PERC 5/i and PERC 6/i, was LSI/Avago MegaRaid Storage Manager v8.00-5, but again… with some caveats…

In order to even get to the installer for a lot of the earlier-than-version-11 MSM, you have to “hack” the silly “Microsoft Installer Protection” annoyances by doing some Windows Registry hacking.

#TODO: If I find the time, I might try to create a simple .reg file or a PowerShell or .bat(ch)/CMD script that will take care of some of these things for you, but you are going to have to reboot anyway for this tweak to take effect:

- Open the Registry Editor (regedit.exe)

- In the search bar / Registry tree you want to find:

- Computer\HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System

- Edit the “EnableLUA” DWORD 32-bit Value to be “0” instead of “1”

- BIG WARNING: This disables User Account Control (UAC), which is a big security risk, so you will want to re-enable it (set “EnableLUA” back to “1” and reboot) after you complete the install.

- Reboot
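Picking up the #TODO above, here is a minimal Python sketch that writes a pair of .reg files: one to turn UAC off before the install and one to turn it back on afterwards. The helper names are hypothetical; the header line, key path and dword: syntax are the standard regedit import format, and saving as UTF-16 matches what regedit itself exports. The BIG WARNING above still applies, and you still need to reboot after importing each file.

```python
from pathlib import Path

# Registry key from the steps above.
KEY = r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System"


def enable_lua_reg(enabled: bool) -> str:
    """Return .reg file text that sets EnableLUA to 1 (UAC on) or 0 (UAC off)."""
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{KEY}]",
        f'"EnableLUA"=dword:{int(enabled):08x}',  # REG_DWORD written as 8 hex digits
        "",
    ]
    return "\r\n".join(lines)  # .reg files use Windows (CRLF) line endings


def write_reg(path: Path, enabled: bool) -> None:
    """Save the .reg text as UTF-16 with a BOM, the same encoding regedit exports."""
    # newline="" stops Python from translating the \r\n already in the text.
    with open(path, "w", encoding="utf-16", newline="") as fh:
        fh.write(enable_lua_reg(enabled))
```

Calling write_reg(Path("uac_off.reg"), enabled=False) and write_reg(Path("uac_on.reg"), enabled=True) gives you two files to double-click (import) before and after the MSM install. From an elevated Command Prompt, reg add "HKLM\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System" /v EnableLUA /t REG_DWORD /d 0 /f does the same thing in one line (use /d 1 to undo it).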

Ok, But Where are You Downloading These Older Versions of MegaRaid Storage Manager (MSM)!?

MegaRAID downloads sorted by download type (firmware, MSM, MegaCLI, Driver) – https://www.broadcom.com/support/knowledgebase/1211161499278/megaraid-downloads-sorted-by-download-type-firmware-msm-megacli-

That Hyperlink/URL has what you need.

TAKE NOTICE that each “Release section” has its own Supported Storage Controllers list, and that the first-and-only listed version is 11.12.01.01, which supports “CacheCade Pro 2.0”. I think that was supported starting with the H700 Controller; it is the first one that I know of that supported “CacheCade 2.0”. But I am not going to get too deep into the subject of CacheCade. It is an interesting feature that does help with performance, but at the expense of using a chunk of one or more SSDs’ space as a “cache” for the larger magnetic/“spinning rust” HDDs, which may or may not be worth it, depending on your use case. For the pre-H700 controllers, I’d say “no way”, since most modern HDDs (made after 2010) in a 5 or 6 disk set are likely to saturate the performance limits of anything older than the H700, anyway. So you may as well keep the SSD for “more fast” storage. That said, it might be beneficial for a ~6TB or ~10TB array (6 x 2TB HDDs in RAID10 or RAID5, respectively), where you would rather have the larger array be faster AND have more redundancy/resiliency than a single disk or a 2-disk RAID0 array. CacheCade is an interesting topic worth exploring on your own, but this guide is already getting absurdly long, and it deserves an entire guide all of its own. DYOR, or reach out and ask me to cover it in more detail (if I still have the spare disks and controllers to test with by then).

Why Does My Browser Choke When I Click the Links to Download MSM?

SUPER annoying, right? “Back in the day” you could use your web browser as an FTP client, and those links would have literally logged you into the MegaRaid FTP site and allowed you to navigate (traverse the directories of) the FTP server with the files you were after. But now, in 2025? It feels like you almost have to be a bit of a hacker to get these MSM files… So… here are some “tricks” that you can use, from the bygone era of “Trust Each Other On the Internet”.

Ok, so I changed my mind about showing you these tricks… but let me describe why I am still a bit reluctant. While I don’t like how little effort has gone into maintaining these older corners of the internet, with absolutely pivotal software that keeps still-useful hardware out of the landfills and off the ocean floors, “I have seen how these things go, before…”, and you have to understand that we are actually still lucky that software this old from a large corporation is available at all. Dell is pretty horrible about making things harder to update and install for its R710-related software “on purpose”. So, the fear is that if too many people abuse or exploit any potential vulnerabilities of this (seemingly) long-forgotten server, then LSI-now-Broadcom might shut it down and remove it completely. I do not want to contribute to that possibility in any way at all, so this is my “I’m trusting you (stranger, on the internet)” preamble. I will just provide some pretty obvious hints as to how you can more easily snag these files in 2025 and beyond (and I highly recommend you save these files to a location you will remember).

Why are the links broken? Because browsers used to have built-in FTP support, but not in 2025: https://www.howtogeek.com/744569/chrome-and-firefox-killed-ftp-support-heres-an-easy-alternative/#windows-and-macs-have-built-in-ftp-support

Notice that Chromium and Firefox (and by extension, Chrome and Edge, too) no longer have a built-in FTP client (since 2021). LAME, right? Well, it’s arguably even lamer that FTP is required here at all… but let’s cope with what we can.

Right-click on the “FTP” (or README.txt) hyperlink in the linked page above for MSM downloads and copy the URL. You can paste that URL, along with the corrected user:pass combo that I deliberately obfuscated (barely… so it’s painfully obvious how to do this), into either PowerShell or any Linux or macOS prompt, like this (in Windows PowerShell 5.1, “curl” is an alias for Invoke-WebRequest, so type “curl.exe” instead):

curl ftp://tsupp*rt:tsupp*[email protected]/private/LSI/megaraid/11.12.01-01_Windows_MSM.zip --output 11.12.01-01_Windows_MSM.zip

Notice the --output xx.xx.xx-File_Name_MSM.zip part at the end that makes the curl magic work in this context. Whatever file you are curl-ing gets saved under the name you give after --output (or its short form, -o), separated by a space. I just used the same name as the version I was testing with, but you may want a different version that matches your specific controller, so adjust accordingly:

curl ftp://……. --output msm-vx.xx.xx-installer_fromFTPftw.zip
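If you would rather script the download than fight with shell quoting, Python’s standard ftplib can do the same job. This is a minimal sketch with hypothetical helper names; the URL you pass in is the same ftp://user:pass@host/path/file URL you built for curl (with the real host and creds), and nothing here is specific to Broadcom’s server beyond a plain FTP login, directory change and binary download:

```python
import posixpath
from ftplib import FTP
from typing import Tuple
from urllib.parse import unquote, urlsplit


def split_ftp_url(url: str) -> Tuple[str, str, str, str, str]:
    """Break ftp://user:pass@host/dir/file into (host, user, password, dir, file)."""
    parts = urlsplit(url)
    if parts.scheme != "ftp" or not parts.hostname:
        raise ValueError(f"not an ftp:// URL: {url}")
    remote_dir, filename = posixpath.split(parts.path)
    return (
        parts.hostname,
        unquote(parts.username or "anonymous"),  # urlsplit leaves %-escapes in place
        unquote(parts.password or ""),
        remote_dir or "/",
        filename,
    )


def download(url: str, dest: str) -> None:
    """Log in, change to the file's directory and save the file locally in binary mode."""
    host, user, password, remote_dir, filename = split_ftp_url(url)
    with FTP(host, timeout=60) as ftp:  # ftplib uses passive mode by default
        ftp.login(user, password)
        ftp.cwd(remote_dir)
        with open(dest, "wb") as fh:
            ftp.retrbinary(f"RETR {filename}", fh.write)
```

Usage would look like download("ftp://USER:PASSWORD@HOST/private/LSI/megaraid/11.12.01-01_Windows_MSM.zip", "11.12.01-01_Windows_MSM.zip"), where USER, PASSWORD and HOST are placeholders for the values from the Broadcom page.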

You can also do this using Windows Explorer, a network-capable file manager in Linux (like Thunar and/or GNOME Files), or even a traditional FTP client (e.g., FileZilla, WinSCP, Cyberduck).

Paste this FTP URL with the path/directory, just without the filename included (and with the correct creds, of course):

ftp://tsupp*rt:tsupp*[email protected]/private/LSI/megaraid/

You should now be able to navigate the FTP server again, like it’s 2021 (more like 2012… but… just get the files you need. There is still some work ahead).

What To Do With The MSM.zip File, Next

Extract, Extract, and Extract some more, until you find the “DISK1” directory, from where you will see “setup.exe” and “MSM.msi”

NOTEWORTHY AND IMPORTANT: There is usually a README.txt (or 2… or 3…) that is absolutely PACKED with useful information, such as the LSI models of the specific Storage Controllers that the release/version should support. There are also sometimes very specific details on how to install and configure the recommended versions of Java, and many other useful tidbits, too. LSI really did do an EXCEPTIONALLY good job with their release notes, and this was back when Release Notes were not very well kept. Much respect goes to the LSI developers for being diligent and doing this! This effort has likely saved many, many thousands of these storage controllers from becoming e-waste, so they are literally saving the planet by creating these high-quality release notes. You want to save the planet, too, right? (Write better release notes, then…)
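The “Extract, Extract, and Extract some more” step can be automated. Here is a minimal Python sketch using only the standard library’s zipfile module; the function names are hypothetical, and the DISK1/setup.exe/MSM.msi names are just the ones described above (other releases may be laid out differently). It keeps unpacking nested .zip files until none are left, then lists the DISK1 folders and every README it found:

```python
import zipfile
from pathlib import Path
from typing import List


def extract_nested(archive: Path, dest: Path) -> None:
    """Extract `archive` into `dest`, then keep extracting any .zip files found
    inside it (each into a folder named after the zip) until none are left."""
    pending = [(Path(archive), Path(dest))]
    while pending:
        zip_path, out_dir = pending.pop()
        with zipfile.ZipFile(zip_path) as zf:
            zf.extractall(out_dir)
            inner = [n for n in zf.namelist() if n.lower().endswith(".zip")]
        for name in inner:
            nested = out_dir / name
            pending.append((nested, nested.with_suffix("")))  # foo.zip -> foo/


def find_installer_dirs(root: Path) -> List[Path]:
    """DISK1 folders (any letter case) that contain setup.exe or MSM.msi."""
    hits = []
    for folder in Path(root).rglob("*"):
        if folder.is_dir() and folder.name.upper() == "DISK1":
            names = {p.name.lower() for p in folder.iterdir()}
            if names & {"setup.exe", "msm.msi"}:
                hits.append(folder)
    return sorted(hits)


def find_readmes(root: Path) -> List[Path]:
    """README files anywhere in the extracted tree: read these before installing."""
    return sorted(p for p in Path(root).rglob("*")
                  if p.is_file() and p.name.lower().startswith("readme"))
```

For example, extract_nested(Path("11.12.01-01_Windows_MSM.zip"), Path("msm_extracted")) followed by find_installer_dirs(Path("msm_extracted")) and find_readmes(Path("msm_extracted")).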

Getting back to the installer… I like to use the *.msi files on Windows whenever possible, but sometimes the .exe files are what end up working instead. MS products are funny like that, sometimes. For example, when you double-click to install these older MSM versions, you will likely be prompted to install “vcredist_x86” as a prerequisite before you can continue with the MSM Installer. So go ahead and do that when the InstallShield Wizard prompts you to.

Note that you may or may not need to install some version of the Java Runtime Environment (JRE) to a specific location and set up your PATH variables for MSM v12+. Here is a good jump-off point if you have an “old, but not 11th Generation old…” Controller Card that uses a higher-than-v11 MSM: Installing LSI / Avago Megaraid Storage Manager – SohoLabs

Also noteworthy: versions of MSM older than ~v13 or so seem to require a JRE and PATH configuration on your system to “see” the “server” for the RAID Controller to be configured by the MSM desktop app. It’s quite a rabbit hole, so I will cut this section here and let you write to me if you get stuck or have insights about a specific controller card having any particular issues. I can try to expand this list by adding any “gotchas” you share with me, and perhaps I can help you work through them, too.
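Before digging into a README’s Java instructions, it can help to see what your system currently resolves. A small Python diagnostic (hypothetical helper name): it only reads PATH and JAVA_HOME and knows nothing about which JRE a given MSM release actually wants, so that release’s README stays the authority there:

```python
import os
import shutil
from pathlib import Path
from typing import Dict, Optional


def java_report(path_env: Optional[str] = None) -> Dict[str, Optional[str]]:
    """Show which `java` the PATH resolves to and what JAVA_HOME points at.

    path_env lets you check an alternative PATH string; None means the real PATH.
    """
    java_home = os.environ.get("JAVA_HOME")
    java_in_home = None
    if java_home:
        for name in ("java.exe", "java"):  # Windows first, then Linux/macOS
            candidate = Path(java_home) / "bin" / name
            if candidate.is_file():
                java_in_home = str(candidate)
                break
    return {
        "java_on_PATH": shutil.which("java", path=path_env),  # honours PATHEXT on Windows
        "JAVA_HOME": java_home,
        "java_in_JAVA_HOME": java_in_home,
    }


if __name__ == "__main__":
    for key, value in java_report().items():
        print(f"{key:>18}: {value or 'not found'}")
```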

What About Flashing Different Firmware to Different Cards for Different Features and Benefits?

While it is true that you can flash the “OEM LSI Firmware variants” onto the “Big Branded Storage Controllers” in many cases, that topic deserves a lengthy and detailed post all of its own. It is typically only marginally beneficial, in somewhat rare edge cases, for cards that are less than PCIe 3.0 capable. For example, you *might* be able to “flash” a RAID storage controller to unlock some additional “RAID modes” that the “big brand firmware” excluded and the “OEM firmware” included. These are most often RAID 6, 50 and 60. These RAID modes require a lot of drives and do not offer a great deal of storage capacity, so there is typically very little benefit to flashing and losing the “Branded firmware compatibility”, unless you are buying mixed-brand hardware. You might also find some PCIe 3.0+ storage controllers that benefit from being flashed from “IR mode” to “IT mode”, so that you can use them as an HBA instead of a RAID-only controller. You will notice that Storage controllers with this “flexibility” are far more highly sought after, because HBA configurations allow for “proper ZFS”, which is ideal in 2025 and beyond (as RAID is no longer getting any significant innovation).

So, if you really want to learn more about how to “Flash Storage Controller Firmware”, send me a message and I’ll try to help you out or do some deep dives in a new, dedicated post on that topic.

Performance Testing Results Using the Dell PERC 6/i and 5/i on Windows 11 in 2025

A note about using x4 slots for storage controllers that are designed for x8 slots: in the limited testing I did with the PERC 5/i and 6/i Storage Controllers on PCIe 2.0 and 3.0 capable x4 and x8 slots, I only noticed about a 10% to 15% decline in maximum I/O using x4 slots. I found this somewhat interesting, considering you might expect the loss to be closer to 50%. That said, I won’t be including these “x4 slot performance results”, since I won’t recommend further crippling an already well-past-its-prime storage controller. But I did want to at least mention this for those with open x4 slots who would find this minimal performance loss acceptable for their use case.
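One way to reason about that x4 result: throughput is capped by whichever is slower, the PCIe link or the controller itself. A minimal Python sketch, using the 500MB/s-per-lane PCIe 2.0 figure from earlier and the ~1150MB/s PERC 6/i peak from later in this post (the function name is hypothetical). A pure min() model says an x4 PCIe 2.0 slot (~2000MB/s) should cost nothing at all, which is why a ~50% drop shouldn’t be expected. The 250MB/s-per-lane case models a hypothetical PCIe 1.x link; it is included because it would land right around the 10% to 15% loss observed, but whether these cards actually negotiate a 1.x link is an assumption worth checking on your own hardware (on Linux, lspci -vv shows the negotiated link under LnkSta):

```python
def effective_ceiling_mb_s(lanes: int, controller_mb_s: float, per_lane_mb_s: float = 500.0) -> float:
    """Throughput is capped by whichever is slower: the PCIe link or the controller."""
    link_mb_s = lanes * per_lane_mb_s
    return min(link_mb_s, controller_mb_s)


if __name__ == "__main__":
    PERC_6I_PEAK = 1150.0  # peak observed in this post, not a spec-sheet number
    for per_lane in (500.0, 250.0):  # PCIe 2.0 rate vs. a hypothetical PCIe 1.x link
        x8 = effective_ceiling_mb_s(8, PERC_6I_PEAK, per_lane)
        x4 = effective_ceiling_mb_s(4, PERC_6I_PEAK, per_lane)
        print(f"{per_lane:.0f} MB/s/lane: x8 ~{x8:.0f}, x4 ~{x4:.0f} MB/s ({1 - x4 / x8:.0%} lower)")
```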

As you might have already guessed, the x8 slot I/O performance results are far from impressive. I will start with what I observed when testing the PERC 5/i. The PERC 5/i never performed any better than ~600MB/s in any RAID configuration, nor with any number of SSDs in a striped array of any kind. This means that no matter how many SSDs you add to the PERC 5/i, you will only ever realize single-SSD speeds, AT BEST. If you happen to have 8 or fewer HDDs under 2TB in capacity and a PERC 5/i with proper breakout cables/backplanes, then maybe you could barely justify using the PERC 5/i controller in 2025. But I am not going to recommend it.

Typically, when moving files larger than 128MB each, the performance of most disks will drastically decline, and the same is true with the PERC 5/i. And if you use 5 or more HDDs with a top sequential read/write speed of ~100MB/s, you will max out the PERC 5/i in a RAID 0 configuration.
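Turning that around: how many HDDs does it take before the controller, not the disks, becomes the limit? A back-of-the-envelope Python sketch using the approximate peak numbers reported in this post (the function name is hypothetical, and it assumes ideal RAID0 scaling, which real arrays won’t quite hit):

```python
import math

# Approximate peak sequential throughput observed in this post (not spec-sheet numbers).
OBSERVED_PEAK_MB_S = {"PERC 5/i": 600, "PERC 6/i": 1150, "H700": 1600}


def disks_to_saturate(controller_mb_s: float, per_disk_mb_s: float) -> int:
    """Smallest RAID0 disk count whose combined sequential speed reaches the controller's peak."""
    return math.ceil(controller_mb_s / per_disk_mb_s)


if __name__ == "__main__":
    for card, peak in OBSERVED_PEAK_MB_S.items():
        counts = ", ".join(f"{disks_to_saturate(peak, d)} disks @ {d}MB/s" for d in (100, 125, 200))
        print(f"{card} (~{peak}MB/s): {counts}")
```

With exactly 100MB/s disks, it takes 6 of them to reach ~600MB/s on the PERC 5/i; with ~125MB/s disks, 5 are enough.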

So the only really viable use for the PERC 5/i in 2025 is adding a few additional HDDs to an older system.

Keep in mind that disks over 2TB are not recognized as more than 2TB by the PERC 5/i and PERC 6/i. So, if you are adding more than 5 HDDs, you should use RAID 5 or RAID 10 for the redundancy benefits.

To make the usefulness and cost-to-performance picture even worse for the PERC 5/i, you will struggle to find a cable such as a “Serial Attached SCSI SAS Cable – SFF-8484 to 4x SFF-8482 with LP4 Power” that allows SAS disks to be used instead of only SATA HDDs or SSDs. Even if you do manage to find this difficult-to-find-and-therefore-expensive cable, you will pay FAR more for the cable alone than you would for the entire controller. That said, having this particular cable would let you use SAS HDDs of 2TB or smaller, which are extremely cheap on used marketplaces. But for the same (perhaps even less!) spend on all these components, you are far better off buying the Dell H700 or better, along with the related breakout cables with SAS-compatible power adapters built in. This is because the H700 supports 8TB+ disks and has a maximum I/O of around 1600MB/s, which is nearly triple that of the PERC 5/i.
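
To see how much of a larger disk the 2TB ceiling mentioned above throws away, here is a small Python sketch. It assumes the ceiling comes from 32-bit sector addressing with 512-byte sectors (the usual explanation for controllers of this era, not something confirmed by Dell documentation here), and that larger disks are simply truncated, as described in this post.

```python
# Assumptions: 512-byte logical sectors and 32-bit sector (LBA) addressing,
# the usual explanation for the 2TB ceiling on controllers of this era.
SECTOR_BYTES = 512
MAX_SECTORS = 2 ** 32
LIMIT_BYTES = SECTOR_BYTES * MAX_SECTORS  # 2**41 bytes, about 2.2TB (2TiB)


def visible_bytes(disk_bytes: int) -> int:
    """Capacity the controller can actually address on one disk."""
    return min(disk_bytes, LIMIT_BYTES)


def wasted_fraction(disk_bytes: int) -> float:
    """Share of a disk's capacity left unusable behind the 2TB ceiling."""
    return 1 - visible_bytes(disk_bytes) / disk_bytes


# Drive sizes as marketed (decimal TB).
for tb in (2, 4, 8):
    disk = tb * 10 ** 12
    print(f"{tb}TB disk: {visible_bytes(disk) / 10 ** 12:.2f}TB visible, "
          f"{wasted_fraction(disk):.0%} wasted")
```

Under these assumptions, a marketed 2TB disk fits entirely, while a 4TB disk loses roughly 45% of its capacity and an 8TB disk roughly 73%.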

Given the low performance of the PERC 5/i, I would *NOT* recommend using a PERC 5/i controller in 2025 on a system with a bus speed capability of PCIe 2.0 or better. Its usefulness and performance limitations do not make it a good choice, even as a cheap option, for expanding systems with PCIe 2.0 or better capabilities. It does have limited usefulness for older systems, or in the event you have an “extra one just laying around”. It might also be useful as a testing device or for importing arrays between other PERC controllers. But that’s about it… I DO NOT RECOMMEND THAT YOU BUY A PERC 5/i in 2025. If you want an extremely cheap option to power 1 to 8 HDDs of 2TB in size or smaller in 2025, even the similarly priced (used) PERC 6/i is capable of about 1150MB/s at peak I/O. But for only a few bucks more, you could get the H700 or better. What you probably want for better future-proofing is something like the HBA330 with the PCIe 3.0 bus (NOT the H330 or H200, which are nearly as awful as the PERC 5/i in performance).

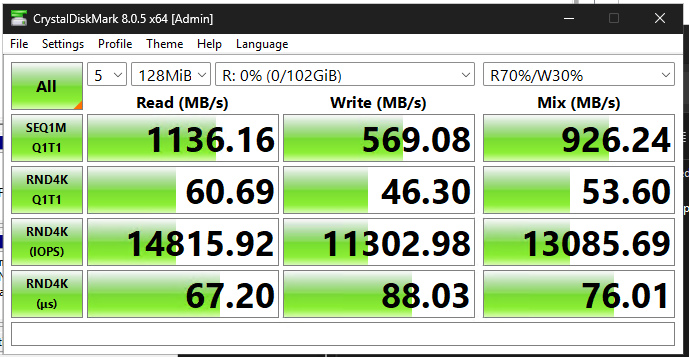

Here is a screenshot of my performance testing using the PERC 6/i with 5x SSDs configured in a RAID 0 array, with all 8x PCIe lanes in a PCIe 3.0 capable system (the PERC 6/i itself is only PCIe 2.0). CrystalDiskMark test parameters were “Random Test Data” and “Real-World Performance +Mix”, with 5 sets of 128MiB for I/O testing (averages shown).

This is not great performance, but the PERC 6/i performs FAR better than the PERC 5/i. Given that most HDDs of 2TB or less, spinning at 4,500 to 7,200 rpm, have an average I/O speed of roughly 80 to 150MB/s (depending on a variety of factors such as date of manufacture, cache size and workload), it is plausible that an 8-disk RAID 0 array would marginally “max out” the I/O capabilities of the PERC 6/i for smaller workloads (files of 128MiB or smaller).
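
The "how many HDDs until the controller is the bottleneck" reasoning above can be sketched in a few lines of Python. This is a back-of-the-envelope model that assumes perfectly linear RAID 0 scaling (real arrays rarely get there); the controller peaks and the 80 to 150MB/s HDD range are the figures from this post.

```python
import math


def drives_to_saturate(controller_mb_s: float, per_drive_mb_s: float) -> int:
    """Fewest striped (RAID 0) drives whose combined throughput reaches the controller ceiling.

    Assumes perfectly linear scaling, which real arrays rarely achieve.
    """
    return math.ceil(controller_mb_s / per_drive_mb_s)


# Controller peaks and HDD speed range taken from the figures in this post.
CONTROLLER_PEAK_MB_S = {"PERC 5/i": 600, "PERC 6/i": 1150}
HDD_MB_S = (80, 100, 150)

for name, peak in CONTROLLER_PEAK_MB_S.items():
    counts = {speed: drives_to_saturate(peak, speed) for speed in HDD_MB_S}
    print(name, counts)
```

This prints `{80: 8, 100: 6, 150: 4}` for the PERC 5/i and `{80: 15, 100: 12, 150: 8}` for the PERC 6/i, so on the 6/i only the fastest (~150MB/s) drives reach the ceiling within 8 disks, which lines up with the "marginally max out" estimate.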

Additionally, some (if not most) PERC 6/i RAID controllers also have the “CacheCade feature”, which means adding a single smaller SSD (like a 120 – 256GB SSD, for example) could boost the performance of a 2-to-7 disk HDD RAID 1, 5 or 10 array (in some use cases).

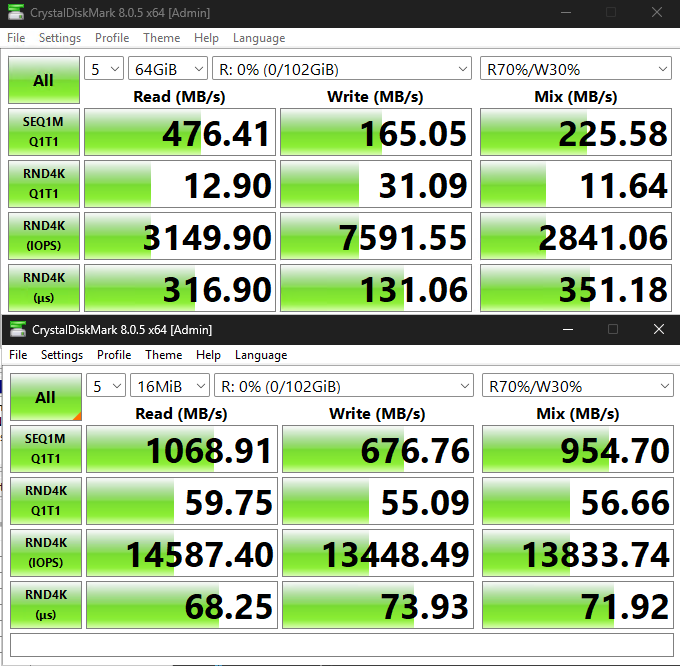

In my opinion, after testing, the PERC 6/i could still be a viable option for a modest database or fewer than 10 minimally-to-modestly active Virtual Machines, etc. So if you need “something cheap”, the PERC 6/i might fit your use case. But a PERC 6/i in 2025 is less than optimal… Here is a “high-to-low” comparison from CrystalDiskMark testing using 64GiB and 16MiB test sizes, with all other test parameters unchanged from above.

Due to this very low performance and the falling costs of other controllers and SSDs, particularly on used marketplaces, I cannot recommend the PERC 6/i in 2025, either. Even though you might be able to piece together a 7-disk RAID 5 or a 6-disk RAID 10 with 1 or 2 SSDs used for CacheCade for ~$200 in 2025, it is NOT a good option. This is because the same “~$200 spend” could get you a used HBA330 with breakout cables and 4+ brand-new SSDs between 240GB and 2TB in capacity (depending on the brand and sale). That would be a FAR better future-proofing spend, with considerably higher performance and more capacity options for both SSDs and HDDs over 2TB each.

All of that said, this post was written for those who may already have these older controllers “just laying around”, or an older system that they do not want to spend much money on updating. As of 2025, I recommend AT MINIMUM the H700 or better for PCIe 2.0 systems, and for better future-proofing I recommend at least the HBA330 or better for PCIe 3.0 systems and/or if you would like to avoid RAID and “upgrade” to ZFS instead. For even more future-proofing, a Dell H730 (PCIe 3.0) or better can be had for ~$40 to $100, often with battery and cables included. What is nice about the H730 is that its “HBA Mode” would eliminate the need for the “RAID battery”, and it offers a “closer to ideal (but-not-quite)” option for using ZFS.

Note about the “ZFS-with-an-H730” debate: there is still a great deal of debate as to whether the H730 allows “enough” of a passthrough / HBA mode for ZFS to fully benefit, but S.M.A.R.T. data DOES pass through on the H730 in HBA mode, hence the “debate”.

Why Am I Using Such Low-Ball Suggestions for Older Tech?

You want to avoid “overkill”, where the capacity and I/O capabilities of your expensive SSDs or large HDDs exceed the limits of older PCI / PCIe 1.x / PCIe 2.x systems. There are hundreds of other posts and reviews/tutorials that will tell you to “spend more!”, but often the additional expense does not bring any additional benefit. Take buying a PCIe 4.0 capable H750 controller for an R710, for example: you would never get anywhere close to its maximum I/O because of the R710’s limited PCIe 2.0 bus and maximum of x8 lanes, despite the H750 having over twice the I/O bandwidth of even the HBA330.
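
The H750-in-an-R710 example comes down to link negotiation: a PCIe link trains to the lower generation and the narrower width of the two ends (a simplification; a slot can also be physically wider than it is wired). The Python sketch below models that rule with theoretical per-lane rates before protocol overhead. Treating both cards as x8 and the R710 slot as PCIe 2.0 x8 are assumptions for illustration.

```python
# Theoretical per-lane, one-direction bandwidth in MB/s after line encoding
# (8b/10b for Gen1/Gen2, 128b/130b for Gen3/Gen4), before protocol overhead.
PER_LANE_MB_S = {
    1: 250.0,
    2: 500.0,
    3: 8000 * 128 / 130 / 8,   # ~985MB/s
    4: 16000 * 128 / 130 / 8,  # ~1969MB/s
}


def negotiated_link(card_gen: int, card_lanes: int, slot_gen: int, slot_lanes: int):
    """Return (generation, lanes, MB/s) for the link both ends can agree on."""
    gen = min(card_gen, slot_gen)
    lanes = min(card_lanes, slot_lanes)
    return gen, lanes, PER_LANE_MB_S[gen] * lanes


# Hypothetical comparison: both cards assumed x8, R710 slot treated as PCIe 2.0 x8.
for card, gen in (("H750", 4), ("HBA330", 3)):
    g, lanes, mb_s = negotiated_link(gen, 8, 2, 8)
    print(f"{card} in an R710: PCIe {g}.0 x{lanes}, ~{mb_s:.0f}MB/s ceiling")
```

Both cards end up at the same PCIe 2.0 x8 ceiling (~4000MB/s theoretical), which is why the extra spend on the newer card buys no additional link bandwidth in that server.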

If you plan to keep your current hardware for as long as possible (or hold its collective resale value), you will want to “max out” its spec potential, but not “overdo it” and waste capacity, unless the cost is low enough for it not to matter. For example, if you buy an H730 for an older PowerEdge R710, you will never quite realize the full capabilities of the H730 in that system, but the cost is only minimally higher for the better adapter, so why not? Use your best judgement, and take the info from this post with you on your DYOR journey.

Hopefully this page helps save you some headaches and research time, and maybe even keeps a few PERC 6/i, H700 or better storage adapters out of the landfill a little bit longer.

That’s all for now!